Sponsored Content

AI-Enabled Digital Twins in Biopharmaceutical Manufacturing

COURTESY OF TONI MANZANO AND AIZON; HTTPS://AIZON.AI

A digital twin (DT) is a theoretical representation or virtual simulation of an object or system composed of a computer model and real-time data (1). A DT model operates upon information received from sensors reporting about various aspects of the physical system and process, such as temperature, raw-materials levels, and product accumulation. From such data, the model can run simulations that enhance process development and optimization. It can predict specified outcomes, flag required actions, and even support closed-loop process control (2).

The biomanufacturing industry is familiar with dynamic mathematical models containing time-based derivative terms of relevant variables. Both these classical simulations and DTs are digital models that replicate a system’s process, but DTs are distinctive in that they operate virtually in parallel with their real-world counterparts. And whereas a classical simulation typically reports about a particular process, a DT has the advantage of simulating many processes concurrently.

The robustness of advanced cloud technologies enables DTs to work in industrial environments that present highly controlled conditions — e.g., by operating without physical connectivity between a system and process controller. DTs can compile results from multiple models running contemporaneously and support self-monitored processes. Such capabilities excel in networks where continuous information exchange reinforces and improves the virtual representation of a physical system.

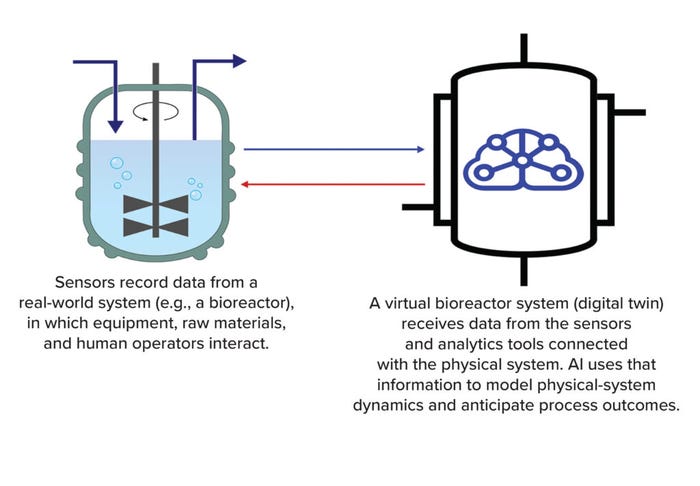

This reflects a major feature of DTs: their ability to accommodate multidirectional information flow (Figure 1). For instance, instrument or process sensors transfer information to the DT, providing relevant operational data to the model. A DT can communicate actionable insights to the personnel monitoring a system or to the system itself. By processing continually updated data from multiple areas in the physical system, the powerful virtual environment of a DT represents a system’s status much more comprehensively and accurately than classical simulations can.

Figure 1: A digital twin is a virtual model of an object or process (AI = artificial intelligence).

During initial development of digital models, historical data from a physical system are used to create a “cybercopy.” Depending on the type and level of real-time connectivity between the copy and the physical system, three types of digital models can be created.

No Connection in Real Time: A cybercopy can serve simply as a model of a physical system. Such setups have proven to be useful during drug and medical-device development. In this situation, the virtual replica is not informed by real-time data; rather, the models present a static picture of how a physical system operated at a specific time. Data scientists can use such models to understand factors and values that determine the model’s broader theoretical framework.

One-Way Connection: In this case, connectivity runs from a physical system to a cybercopy. The digital model receives information in real time and updates continuously, enabling it to emulate conditions occurring in the real-world system. A one-way in silico replica can be useful for applying process analytical technology (PAT), real-time monitoring, and remotely initiated process control — all of which can improve an ongoing process.

Full Connection: Bidirectional connectivity enables a model to support a physical manufacturing system in a growing number of ways. Real-time updates to the model provide for such activities as intelligent closed-loop control. Thus, batches can be produced in the right way during every iteration through continuous monitoring and “smart” operation. We might think of a fully connected model as an airplane pilot who, in response to schedule changes or atmospheric events, provides input to flight control systems to adjust or maintain a flight path.

Physical reality must be measured and analyzed in real time to produce a contemporary, dynamic digital counterpart of an object or process. DTs are an example of the third scenario described above. The Fourth Industrial Revolution (Industry 4.0) — including such advances as the internet of things (IoT), cloud computing, and artificial intelligence (AI) — has enabled full implementation of DTs.
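The three connectivity levels described above can be illustrated with a minimal Python sketch. The class, method, and variable names are hypothetical and chosen only for illustration; a real DT platform would involve far more than the two permission checks shown here.

```python
from dataclasses import dataclass, field
from enum import Enum


class Connectivity(Enum):
    NONE = "no real-time connection"   # static model built from historical data
    ONE_WAY = "physical to model"      # model mirrors live data but cannot act
    FULL = "bidirectional"             # model mirrors live data and can act


@dataclass
class DigitalModel:
    connectivity: Connectivity
    state: dict = field(default_factory=dict)     # latest known process values
    commands: list = field(default_factory=list)  # setpoints sent to the plant

    def ingest(self, reading: dict) -> bool:
        """Update the model from a live sensor reading, if connected."""
        if self.connectivity is Connectivity.NONE:
            return False  # static cybercopy: live data are ignored
        self.state.update(reading)
        return True

    def actuate(self, variable: str, setpoint: float) -> bool:
        """Send a setpoint back to the physical system, if allowed."""
        if self.connectivity is not Connectivity.FULL:
            return False  # only a fully connected model closes the loop
        self.commands.append((variable, setpoint))
        return True


# Illustrative values only: one historical snapshot and one live reading
static = DigitalModel(Connectivity.NONE, state={"temperature_C": 37.0})
shadow = DigitalModel(Connectivity.ONE_WAY)
twin = DigitalModel(Connectivity.FULL)

for model in (static, shadow, twin):
    model.ingest({"temperature_C": 37.4})
    model.actuate("temperature_C", 37.0)
```

After the loop, the static model still holds its historical snapshot, the one-way model has the live reading but has issued no commands, and only the fully connected model has both updated its state and sent a setpoint.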

The associated technologies have advanced to such a point that a properly trained, AI-enabled DT will avoid limitations with customary approaches to process analysis, including poor results from human habits and prejudiced thinking. Beyond extrapolating insights from expertly correlated data within immense databases, DTs provide de novo, “outside-the-box” reasoning that generates new solutions from seemingly nonintuitive sources. And because AI-enabled DTs perform counterfactual analysis rapidly, they present answers to hypothetical situations in real time. For instance, they project what would have happened had a different control action been taken, or they identify what action would have been proposed had a measured parameter returned a different value. Rapidly generated hypothetical values provide clear evidence to defend a proposed process solution before making physical changes. Such values also provide useful means for identifying causative and correlative events for further consideration. In those respects, counterfactual analysis is a powerful tool for improving our understanding of both our physical systems and the solutions proposed by our models.
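As a rough illustration of counterfactual analysis, the Python sketch below replays a toy process model from the same starting state under two control histories: the one that was actually applied and a hypothetical alternative. The mass-balance model, the uptake rate, and all numbers are assumptions made for this example; a real DT would use a trained or mechanistic model in place of `simulate`.

```python
def simulate(substrate_g_per_L: float, feed_rates: list,
             uptake_g_per_L_h: float = 0.5, dt_h: float = 1.0) -> list:
    """Toy mass balance: substrate rises with feed and falls with uptake."""
    trajectory = [substrate_g_per_L]
    for feed in feed_rates:
        nxt = trajectory[-1] + (feed - uptake_g_per_L_h) * dt_h
        trajectory.append(max(nxt, 0.0))  # concentration cannot go negative
    return trajectory


initial = 2.0
actual_feed = [0.2, 0.2, 0.2, 0.2]       # control actions actually taken
alternative_feed = [0.2, 0.2, 0.6, 0.6]  # "what if feed had been raised at hour 2?"

factual = simulate(initial, actual_feed)
counterfactual = simulate(initial, alternative_feed)

# Difference at each time point attributable to the alternative action
effect = [round(c - f, 6) for f, c in zip(factual, counterfactual)]
```

Because both runs share the same model and starting point, any difference between the two trajectories can be attributed to the changed control action, which is the kind of evidence the paragraph above describes.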

DT Functions and Applications

Scientists who do not work with AI sometimes conflate the different ways in which digital models, such as AI-empowered DTs, can be used. That problem can occur even in defined applications such as medical (and now precision) diagnostics and biomanufacturing operations.

AI-supported models serve as tools for descriptive analysis, by which data from past events are collected and simply reported. One step further is a model-based diagnostic analysis, during which a model leverages known data relationships to shed light on correlations and/or causations in past events. Predictive analysis of an operation or tool evaluates past process performance, known associations, and even mechanistic knowledge to generate forecasts of progressive actions. Prescriptive analysis provides actionable insights — e.g., suggested parameter adjustments — to support open-loop process control. In its most advanced form, a model could provide direct and active closed-loop system control by actuating changes in multiple control elements to maintain a process in a desired state. With respect to risk management, it should be noted that an AI-enabled DT in a control loop can take action only upon defined manipulated variables within the system network.
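The risk-management point can be sketched in Python as an allow-list that every proposed control action must pass before it reaches the plant. The variable names and bounds are hypothetical, and clamping a proposal into an approved range is an added assumption; the text states only that action is limited to defined manipulated variables.

```python
from typing import Optional

# Hypothetical allow-list: variable name mapped to (lower bound, upper bound)
MANIPULATED_VARIABLES = {
    "feed_rate_L_per_h": (0.0, 2.0),
    "agitation_rpm": (50.0, 300.0),
}


def vet_action(variable: str, proposed: float) -> Optional[float]:
    """Return a setpoint that may be sent, or None if the variable is off-limits."""
    limits = MANIPULATED_VARIABLES.get(variable)
    if limits is None:
        return None  # not a defined manipulated variable: refuse to act
    low, high = limits
    return min(max(proposed, low), high)  # clamp into the approved range (assumption)
```

For example, `vet_action("agitation_rpm", 500.0)` returns `300.0`, whereas `vet_action("ph_setpoint", 7.0)` returns `None` because that variable is not on the list.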

Why AI Now?

Like the Gutenberg printing press and the World Wide Web, OpenAI’s Generative Pre-Trained Transformer 4 (GPT-4) language model arguably represents a seismic shift in information technology (IT). That claim might or might not be an exaggeration; history will decide. But recent applications without doubt have categorically changed the world’s appreciation of AI’s speed, power, and potential. The only serious questions that remain are how to map, regulate, and control such technology.

Although the discipline of AI was created in the 1950s, it is just now beginning to fulfill its promises because supporting technologies have only recently become powerful enough to feed and support it (see the “Milestones” box). As happened with the Internet, AI is gaining traction not only in academia and advanced business sectors, but also in democratized spaces. Society is quickly adopting AI as a standard tool precisely because the general public served as “early adopters” of the technologies needed to support it.

The recent emergence of successful AI applications derives more from advances in supporting technologies than from developments in their underlying algorithms. Functional applications of AI sputtered at first because processing speed and data accessibility were lacking. In fact, the field has suffered two “winters,” the first of which came in the 1970s when computing power was insufficient to operate the algorithms efficiently. The advent of personal computing rectified that situation. Then, during the 1990s, AI suffered its second winter because storage space and processing speeds could not handle the volumes of data that are required to support powerful and trustworthy AI model operation. Today, cloud technologies have solved such problems and are helping to democratize AI and its applications.

Recent applications have brought AI’s significant potential to the forefront of society in general. Scientific applications of “narrow” AI, such as the AlphaFold protein-structure database from DeepMind, have revolutionized structural biology by generating remarkably efficient and accurate three-dimensional (3D) protein-structure predictions based on peptide sequences. GPT-4 has inspired widespread debate about the potential and pitfalls of products that approach artificial general intelligence (AGI). For some time, such corporate giants as Google, Amazon, and Netflix have been analyzing consumer data to transform bytes into insights. Now, AI is enabling a transition from insights to knowledge.

AI is being applied in multiple industries and contexts to recognize patterns, detect outliers in data sets, predict outcomes, recommend strategies, control processes, analyze and emulate discrete speech, compose prose and poetry, and process images (3). Its image-processing capability is being exploited in such applications as facial recognition, operation surveillance, and medical-image screening. In diagnostic medicine, AI is used not only to enhance images, but also to screen them for patterns that correlate with particular diseases. Classification and segmentation are key powers of AI, and in medicine and robotics, those abilities are being harnessed to categorize and interpret images and/or to segment them based on their subcomponents.

A properly trained AI algorithm emulates human cognitive attributes within the domain that it addresses. But we humans are still needed to train AI applications in a process called alignment, and humans are needed to ensure that AI is applied, implemented, and maintained correctly.

From Programmable Logic to AI Knowledge

The code supporting programmable logic controller (PLC)–based processing continues to develop, drawing upon enhancing technologies as they appear. Digitalization represents the first step in bringing industrial data to the level of information, enabling transformation of PLC outputs into actionable insights. Previously, only classical statistics had been used, with human intervention, to transform information into manufacturing-process knowledge and control outputs. Although Shewhart control charts, trends, and related tools can display values in relation to control limits, such means provide oversimplified representations of manufacturing realities.
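One way to see why univariate limits can oversimplify is the Python sketch below, which uses made-up data for two strongly correlated process variables. A new observation falls inside the three-sigma limits of both variables, yet it breaks their usual relationship, and a multivariate distance exposes that. Several simplifications are assumed here: sigma is taken as the sample standard deviation (individuals charts usually estimate it from moving ranges), and the multivariate limit is a large-sample chi-square approximation with two degrees of freedom rather than an exact Hotelling limit.

```python
import math
import statistics

# Hypothetical in-control history for two correlated process variables
x_hist = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
y_hist = [1.1, 1.9, 3.2, 3.8, 5.1, 5.9, 7.2, 7.8, 9.1, 9.9]


def shewhart_limits(values):
    """Centre line plus or minus three sample standard deviations."""
    centre = statistics.mean(values)
    sigma = statistics.stdev(values)
    return centre - 3 * sigma, centre + 3 * sigma


def within(value, limits):
    low, high = limits
    return low <= value <= high


def mahalanobis_sq(x, y):
    """Squared Mahalanobis distance of (x, y) from the historical mean."""
    mx, my = statistics.mean(x_hist), statistics.mean(y_hist)
    n = len(x_hist)
    sxx = statistics.variance(x_hist)
    syy = statistics.variance(y_hist)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x_hist, y_hist)) / (n - 1)
    det = sxx * syy - sxy ** 2  # determinant of the 2x2 covariance matrix
    dx, dy = x - mx, y - my
    return (syy * dx ** 2 - 2 * sxy * dx * dy + sxx * dy ** 2) / det


x_limits = shewhart_limits(x_hist)
y_limits = shewhart_limits(y_hist)

# Chi-square quantile for 2 degrees of freedom at 99.73% coverage
T2_LIMIT = -2 * math.log(1 - 0.9973)

new_x, new_y = 3.0, 8.0  # each value is unremarkable on its own
univariate_ok = within(new_x, x_limits) and within(new_y, y_limits)
multivariate_ok = mahalanobis_sq(new_x, new_y) <= T2_LIMIT
```

With these numbers, `univariate_ok` is `True` while `multivariate_ok` is `False`: two separate control charts would pass a point that the joint view flags.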

AI is now being applied broadly to support humans in transforming data from multivariate, real-world situations into comprehensive understanding (4). From specific applications such as spellcheck functions to more general tools, including those based on deep learning (DL), we believe that AI will provide comprehensive solutions to long-standing challenges. Just as robots assist or replace human bodies to perform given tasks, AI emulates human brains to simplify more complex work.

If an algorithm is the powerful engine in an AI-supported model, then data are the fuel. And as with any fuel, performance depends on both quantity and quality. In drug manufacturing, relevant data are generated over time from multiple sources. Advances in process monitoring, including new process analytics and real-time parameter sensors, are increasing bioprocess understanding (5, 6). The emergence of “-omics” fields has done much the same (7, 8). Individual pharmaceutical manufacturers now generate petabytes of such data per year. Unfortunately, that fuel often is stored, not used. Regardless of potential value, when gathered data simply fill digital space, as happens with up to 70% of the information generated at a given biomanufacturing site, companies lose the ability to gain process and product knowledge.

But that tendency is changing as developments in data science complement the aforementioned advances in computing power and data storage, enabling multidisciplinary approaches to ensuring data quality, relevance, and availability. Modern data science now involves mathematics, statistics, advanced programming, and AI to curate and govern large amounts of data for effective and efficient use (9–11). Cloud technologies also are providing the capabilities needed to augment computing disciplines and enable practical application in industry. A new concept termed data intentionality is inspiring alterations in current approaches to data generation and governance. Previously, companies intended to apply generated data for manual analysis and solution discovery. The data-intentionality concept instead suggests that mechanisms for data generation and management should be designed specifically to feed into AI-supported DTs.

The IoT connects physical objects to cloud capabilities, enabling secure data bridges, “big-data” storage, and unlimited parallel computing. Thus, multivariate techniques are applied to large data sets to produce cybercopies of the physical systems that produced the data. Seeking to coordinate such technologies within single systems, initiatives such as the push to adopt Unified Namespace (UNS) software layers will support DTs as the next step in the natural evolution of digitalization as applied to industrial activities and assets. Programs such as risk-based management (RBM) assess and govern data collection and distribution based upon analysis of the information’s value and of the consequences of failure to acquire such data.

Synthetic Knowledge in the Pharmaceutical Industry

Pharmaceutical applications requiring advanced data handling include drug discovery, development, and manufacturing, as well as clinical-trial enrollment, monitoring, and endpoint detection. Traditionally, pharmaceutical science has involved laboratory-scale studies using such rudimentary tools as bilinear factor models, which simplify complexity and suggest ways to reduce variability in results. Nevertheless, regulatory agencies have encouraged the pharmaceutical industry to address reality as it is, understanding it with all the nuance and complexity upon which it is built (12). Rather than simplify the biological, physical, and chemical nature of biomanufacturing, regulatory administrators now invite companies to use the newest available science to address all relevant parameters, including the inherent variability and complexity of biological products, manufacturing processes, and associated systems (13).

Today, we can evaluate both variability and complexity as natural parameters of a power equation managed by AI (14). In that context, synthetic knowledge refers to the ability of machines to analyze large amounts of dissimilar data, identify patterns, learn from them, and present conclusions or predictions that would be impossible for humans to conceive through even math-supported analysis.

Dismissing the historical approach of making univariate assumptions between predictor and target variables lets us work on reality as it is by means of synthetic knowledge. That includes leveraging AI-supported analysis of the petabytes of data that are collected throughout a drug’s product life cycle.

AI in Today’s Pharmaceutical Industry

AI is now a commodity and an accepted part of industry and society. Examples include how we trust “machine vision” to secure our telephones and how we rely on the recommendations of global positioning system (GPS) apps to arrive at our destinations on time. The pharmaceutical industry, too, is adopting the benefits of AI, but in merely opportunistic ways and not under a coherent strategy. AI has been demonstrated to be the best tool to solve problems with product yield, overall equipment effectiveness (OEE), deviation management, anomaly detection, and various demands for pattern recognition. AI is already addressing problems that could not be solved with old methods and technologies (e.g., classical statistics, linear regression, spreadsheets, and simplistic models). And it has succeeded because the realities of drug development and manufacturing are multifaceted, interactive, and continuously variable over a complete product life cycle.

Biomanufacturing processes are complex because of the many variables involved in their execution, interactions among those variables, and concerns about required external components. Such complexity makes it difficult to institute an accurate and robust management infrastructure for analytical control. AI will play a critical role in solving that problem, and the IoT is the perfect tool to connect, in real time, contemporary physical-world data produced by on-line monitoring with the infinite data storage and processing capabilities available in the cloud.

AI Along the Product Life Cycle

Specialization supports excellence in individual disciplines but can limit appreciation of broader implications. Many scientists value the power of AI in their areas of expertise but might be unaware of its growing scope of application throughout the pharmaceutical industry, from target discovery to postmarketing surveillance (15). For example, during product discovery and development, AI now improves construction of design-of-experiments (DoE) approaches based on the full spectrum of parameters influencing the biophysicochemical properties of the systems under investigation (16). In process development, AI is empowering DT analysis of heterogeneous data from numerous analytical instruments and probes, enabling multiparametric simulations (17, 18).

During clinical trials, AI has, for instance, helped to reduce bias in trial design through “in silico trials,” establishing patient-specific models to form virtual cohorts covering understudied populations (16). In process validation, scientists must develop extensive understanding of their analytical methods’ accuracy and efficiency. From process design (PD) to process qualification (PQ) and continued process verification (CPV), AI provides powerful tools for multivariate analytics that support real-time process monitoring and control (19).

Quality assurance/control (QA/QC) scientists are leveraging AI to discover and understand critical quality attributes (CQAs) and critical process parameters (CPPs), develop quality control plans (QCPs), maintain electronic laboratory notebooks (ELNs) and laboratory information-management system (LIMS) dashboards, and support reduction and distribution of new data. In the realm of procurement, from inventory management to accounts receivable, AI supports such core functions as supply-chain management.

AI-enabled DTs are enhancing manufacturing activities, helping to produce optimal batches consistently by interpreting and sometimes acting upon real-time process data and by interacting with historical data files. Because DTs are cybercopies of physical manufacturing processes, they are ideal mechanisms by which to investigate and evaluate processes without compromising them. That capability makes it possible to simulate conditions and occurrences, predict undesired episodes, and recommend multivariate results that help to maximize efficient production of high-quality products.

In terms of product management and distribution, AI analysis of historic, current, and predicted data could increase flexibility and responsiveness, supporting better demand forecasts. Narrow AI algorithms supply practical information, including efficient shipping routes and currently inexpensive shipping modes and suppliers. During phase 4 trials (postmarketing surveillance), AI assists in monitoring, analysis, and alerts. It also facilitates data distribution required to assess a drug’s real-world performance, enabling early identification of rare side effects and other issues. And during product retirement, AI aids in managing voluminous interactive documentation; process, facility, and service management; environmental, health, and safety compliance; and operational, financial, and contractual activities.

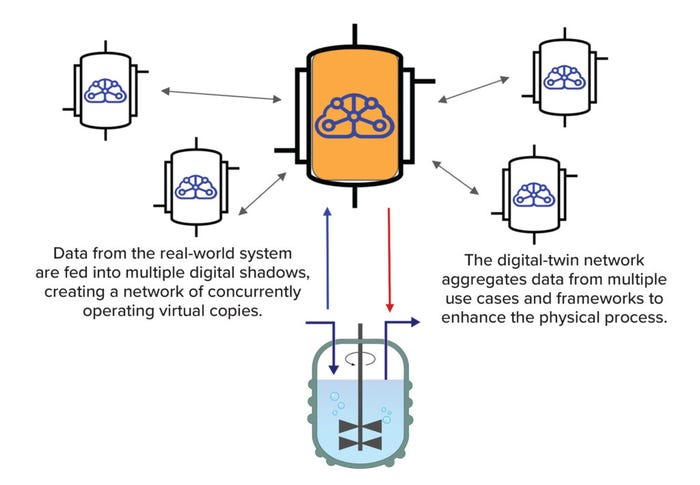

Although many benefits can come from deep understanding gained when AI is applied to historical data, even more possibilities emerge when such knowledge is managed in real time. That strategy requires adequate mechanisms (such as an AI-enabled DT) for consistent, bidirectional information exchange between the physical and virtual worlds. We also should note that it would be an error to create new silos of knowledge by maintaining DTs that do not interact with one another. Only when transverse or lateral processes are connected can you find underlying evidence of complexity across vertical operations within the same unit or network (Figure 2).

Figure 2: A digital twin can be configured in many ways, including as part of a network.

Biopharmaceuticals and DTs

Since the launch of Humulin U-100 biosynthetic insulin in 1982, the biologics industry has advanced considerably, not only in the numbers and types of products developed, but also in manufacturing technologies. Substantial developments have occurred in cell-line engineering, cell culture, product and process understanding, and process analytics and control.

Bioprocess data come with high dimensionality and complexity, which arise from interactions among multiple variables. The variety of data sources and formats presents operational issues, including variability and “noise” in measurement, both of which diminish standardization and interoperability. Such problems make it difficult, using classical techniques, to identify meaningful patterns within bioprocess data and to understand underlying relationships among many variables.

Advances in process monitoring and analytics have increased the volumes and types of data to be accounted for, driving demand for better data-processing and process-control techniques. Recent developments in automation, process intensification, and continuous processing have magnified such needs.

Meanwhile, data science, algorithmic capability, and computing power have progressed significantly. Currently, the only way to work with the vast stores and many different types of product and process data is through multivariate analysis based on advanced modeling techniques. Increasing standardization in data formats and protocols, and in techniques for data analysis and machine learning (ML), is enabling researchers and engineers to analyze, model, and control bioprocesses based on data-driven approaches. DTs hold much promise for emulating the complexity of biological processes and for addressing all of the difficulties related to the continuous variability associated with biopharmaceutical operations (20, 21).

A DT Use Case: In classical batch manufacturing, active pharmaceutical ingredients (APIs) are made in intermittent steps that can take place at different times and even locations. In continuous manufacturing (CM), processes take place in one contiguous train, without interruption and with material charging and product discharging simultaneously. CM is complex, and an advanced dynamic-control system is required to monitor and interact with the equipment and products in real time. For example, it is necessary to control the dosing of starting materials — e.g., culture media — in proportion to their consumption during the process.
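The dosing example can be sketched in Python as a simple mass balance. The sketch assumes a constant working volume, rates expressed per unit volume, and a hypothetical target concentration and feed ceiling; none of those specifics come from the use case itself, and a production controller would be considerably more elaborate.

```python
def consumption_rate(prev_g_per_L: float, now_g_per_L: float,
                     feed_g_per_L_h: float, dt_h: float) -> float:
    """Back out nutrient uptake from a mass balance over one interval."""
    # change = feed - uptake, so uptake = feed - change
    change_rate = (now_g_per_L - prev_g_per_L) / dt_h
    return feed_g_per_L_h - change_rate


def next_feed_rate(uptake_g_per_L_h: float, now_g_per_L: float,
                   target_g_per_L: float, dt_h: float,
                   max_feed_g_per_L_h: float) -> float:
    """Feed to match uptake, plus a correction toward the target concentration."""
    correction = (target_g_per_L - now_g_per_L) / dt_h
    proposed = uptake_g_per_L_h + correction
    return min(max(proposed, 0.0), max_feed_g_per_L_h)  # keep within pump range


# Illustrative numbers: concentration fell from 4.0 to 3.5 g/L in one hour
uptake = consumption_rate(prev_g_per_L=4.0, now_g_per_L=3.5,
                          feed_g_per_L_h=0.3, dt_h=1.0)
feed = next_feed_rate(uptake, now_g_per_L=3.5, target_g_per_L=4.0,
                      dt_h=1.0, max_feed_g_per_L_h=2.0)
```

Here the estimated uptake is about 0.8 g/L/h, so the next feed rate is set to roughly 1.3 g/L/h: enough to replace what the culture consumes and to recover the shortfall over the next interval.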

CM of small-molecule drugs has been popular for many years, and for decades, some biopharmaceutical companies have been implementing perfusion bioreactors to support continuous production. But uptake of CM has lagged in downstream operations. DTs could improve diafiltration and other downstream steps by recommending or even adjusting pump speeds to minimize the requisite number of cycles. Roche and Biogen already use continuous operations, and reports are emerging of CM for both up- and downstream processes (22). Such developments raise the prospect of end-to-end, integrated CM for small volumes of some advanced therapies and large volumes of protein biologics (23, 24).

Setting Sail for Bioprocessing 4.0

Advances in process monitoring, data accessibility, and computing power are fueling the power of AI-enabled models, as is the democratization of such technology. DTs can rapidly reduce the high dimensionality, format complexity, and diversity of measurements in biopharmaceutical activities to actionable conclusions. For example, as trained by appropriate subject-matter experts (SMEs), DTs offer the promise of producing all batches under the expected conditions, all day and every day, without operator attention. Bioprocess understanding, development, and control are improving significantly as the industry implements AI-enabled DTs. Jay Baer (president of marketing consultancy Convince & Convert) recently tweeted, “We are surrounded by data but starved for insights.” If data are the winds behind Bioprocessing 4.0, then AI-enabled DTs are the sails.

References

1 Liu X, et al. Systematic Review of Digital Twin About Physical Entities, Virtual Models, Twin Data, and Applications. Adv. Eng. Informatics 55, 2023: 101876; https://doi.org/10.1016/j.aei.2023.101876.

2 Attaran M, Celik BG. Digital Twin: Benefits, Use Cases, Challenges, and Opportunities. Decis. Anal. J. 6, 2023: 100165; https://doi.org/10.1016/j.dajour.2023.100165.

3 Institute for Human-Centered Artificial Intelligence. AI Index Report. Stanford University: Stanford, CA, 2023; https://aiindex.stanford.edu/report.

4 Manzano T, Whitford W. AI Applications for Multivariate Control in Drug Manufacturing. A Handbook of Artificial Intelligence in Drug Delivery. Philip A, et al., eds. Elsevier: Amsterdam, Netherlands, 2023; https://shop.elsevier.com/books/a-handbook-of-artificial-intelligence-in-drug-delivery/philip/978-0-323-89925-3.

5 Iyer MS, Pal A, Venkatesh KV. A Systems Biology Approach To Disentangle the Direct and Indirect Effects of Global Transcription Factors on Gene Expression in Escherichia coli. Microbiol. Spectrum 11(2) 2023: e02101-22; https://doi.org/10.1128/spectrum.02101-22.

6 Corredor C, et al. Process Analytical Technologies (PAT) Applications for Upstream Biologics. Amer. Pharm. Rev. 18 April 2022; https://www.americanpharmaceuticalreview.com/Featured-Articles/585192-Process-Analytical-Technologies-PAT-Applications-for-Upstream-Biologics.

7 Farouk F, Hathout R, Elkady E. Resolving Analytical Challenges in Pharmaceutical Process Monitoring Using Multivariate Analysis Methods: Applications in Process Understanding, Control, and Improvement. Spectroscopy 38(3) 2023: 22–29; https://www.spectroscopyonline.com/view/resolving-analytical-challenges-in-pharmaceutical-process-monitoring-using-multivariate-analysis-methods-applications-in-process-understanding-control-and-improvement.

8 Gerzon G, Sheng Y, Kirkitadze M. Process Analytical Technologies — Advances in Bioprocess Integration and Future Perspectives. J. Pharm. Biomed. Anal. 207, 2022: 114379; https://doi.org/10.1016/j.jpba.2021.114379.

9 Han H, Trimi S. Towards a Data Science Platform for Improving SME Collaboration Through Industry 4.0 Technologies. Technol. Forecast. Social Change 174, 2022: 121242; https://doi.org/10.1016/j.techfore.2021.121242.

10 Shi Y. Advances in Big Data Analytics: Theory, Algorithms, and Practices. Springer Nature: Singapore, 2022; https://doi.org/10.1007/978-981-16-3607-3.

11 Wang J, et al. Big Data Analytics for Intelligent Manufacturing Systems: A Review. J. Manufact. Sys. 62, 2022: 738–752; https://doi.org/10.1016/j.jmsy.2021.03.005.

12 ICH Q8(R2). Pharmaceutical Development. European Medicines Agency: Amsterdam, Netherlands, 22 June 2017; https://www.ema.europa.eu/en/ich-q8-r2-pharmaceutical-development-scientific-guideline.

13 Using Artificial Intelligence & Machine Learning in the Development of Drug and Biological Products: Discussion Paper and Request for Feedback. US Food and Drug Administration: Silver Spring, MD, May 2023; https://www.fda.gov/media/167973/download.

14 Mozaffar M, et al. Mechanistic Artificial Intelligence (Mechanistic-AI) for Modeling, Design, and Control of Advanced Manufacturing Processes: Current State and Perspectives. J. Mater. Process. Technol. 302, 2022: 117485; https://doi.org/10.1016/j.jmatprotec.2021.117485.

15 Bhattamisra SK, et al. Artificial Intelligence in Pharmaceutical and Healthcare Research. Big Data Cogn. Comput. 7(1) 2023: 10; https://doi.org/10.3390/bdcc7010010.

16 Kolluri S, et al. Machine Learning and Artificial Intelligence in Pharmaceutical Research and Development: A Review. AAPS J. 24, 2022: 19; https://doi.org/10.1208/s12248-021-00644-3.

17 Zhihan LV, et al. Artificial Intelligence in Underwater Digital Twins Sensor Networks. ACM Trans. Sen. Netw. 18(3) 2022: 39; https://doi.org/10.1145/3519301.

18 Osamy W, et al. Recent Studies Utilizing Artificial Intelligence Techniques for Solving Data Collection, Aggregation and Dissemination Challenges in Wireless Sensor Networks: A Review. Electronics 11(3) 2022: 313; https://doi.org/10.3390/electronics11030313.

19 Ondracka A, et al. CPV of the Future: AI-Powered Continued Process Verification for Bioreactor Processes. PDA J. Pharm. Sci. Technol. 77(3) 2023: 146–165; https://doi.org/10.5731/pdajpst.2021.012665.

20 Isuru A, et al. Digital Twin in Biomanufacturing: Challenges and Opportunities Towards Its Implementation. Sys. Microbiol. Biomanufact. 1, 2021: 257–274; https://doi.org/10.1007/s43393-021-00024-0.

21 Alavijeh MK, et al. Digitally Enabled Approaches for the Scale-Up of Mammalian Cell Bioreactors. Dig. Chem. Eng. 4, 2022: 100040; https://doi.org/10.1016/j.dche.2022.100040.

22 Ramos I, et al. Fully Integrated Downstream Process To Enable Next-Generation Manufacturing. Biotechnol. Bioeng. 23 March 2023; https://doi.org/10.1002/bit.28384.

23 Schwarz H, et al. Integrated Continuous Biomanufacturing on Pilot Scale for Acid-Sensitive Monoclonal Antibodies. Biotechnol. Bioeng. 119(8) 2022: 2152–2166; https://doi.org/10.1002/bit.28120.

24 Final Conference Program. Integrated Continuous Biomanufacturing V: Back to Barcelona — Progress & Potential of ICB. Engineering Conferences International: Sitges, Spain, 9–13 October 2022; https://engconf.us/conferences/biotechnology/integrated-continuous-biomanufacturing-v.

Toni Manzano is cofounder and chief scientific officer of Aizon, C/ de Còrsega, 301, 08008 Barcelona, Spain; 34-937-68-46-78; [email protected]; https://www.aizon.ai.

BPI editorial advisor William Whitford is life sciences strategic solutions leader at Arcadis DPS Group; [email protected]; https://www.dpsgroupglobal.com.
