Some people have become disillusioned with the limitations of artificial intelligence (AI) in practical application. Mass-media productions (e.g., Ex Machina) set expectations of general AI or super AI, which would supply comprehensive, self-directed results. In reality, it is narrow AI (which addresses only one task and provides specific results) that is growing rapidly, both in power and number of applications (1). Although other modeling methods remain dominant, AI is providing significant and increasing value in drug discovery, process development, technical transfer, and manufacturing (2).

In general, the well-known past failures of AI applications did not result from limitations in algorithms. Disillusionment with AI, arising mainly from limitations in supporting technologies, led to the “second winter” of AI between 1987 and 1990 (3). Over that period, application success was impeded by slow processing speed, low capacity, poor approaches to data handling, and limitations in data transmission and storage. Today, more and better data for pharmaceutical process development and control are coming from new monitoring technologies and analytics, networking systems, cloud storage, and data labeling and structure management.

Dealing with Data

We’ve heard for some time that data are the key to efficiency in accomplishing AI goals. But there is now more to the story than “big data.” For example, AI guru Andrew Ng explains that because of the refinement accomplished in AI tools, he now advocates for an even deeper focus on data sets. He defines a second-generation data-centric AI approach as engineering higher quality and relevance into data sets.

Although Ng acknowledges that the approach improves as more data become available, he is now promoting the concept that smaller, properly managed data sets can serve the same purpose (4). Data preparation must be considered as a critical step for AI systems in biopharmaceutical operations because the quality of raw information used to feed AI models is the major determinant in the efficacy of their outputs. Vas Narasimhan, CEO of Novartis, has emphasized the need to guarantee the required quality of data before their use for AI purposes (5).

The two basic approaches AI uses in handling volumes of data are referred to as supervised and unsupervised learning. In supervised learning, the machine is taught by example: an algorithm is provided with a data set of inputs paired with their desired outputs. The machine then must find a method to produce those defined outputs from the inputs.
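The idea of teaching by example can be sketched in a few lines. The block below is a minimal illustration only, using a nearest-centroid classifier on invented inspection data; the feature names, values, and labels are hypothetical and do not come from any system described in this article.

```python
from collections import defaultdict

def train_centroids(examples):
    """Learn one mean feature vector (centroid) per label from labeled examples."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], features))

# Hypothetical labeled examples: (fill volume in mL, particle count) -> desired output
labeled = [
    ((1.00, 0), "accept"), ((1.02, 1), "accept"), ((0.99, 0), "accept"),
    ((0.80, 0), "reject"), ((1.01, 9), "reject"), ((0.78, 7), "reject"),
]

model = train_centroids(labeled)          # "training": learn from inputs paired with outputs
first = predict(model, (1.01, 1))         # new, unlabeled unit
second = predict(model, (0.82, 6))        # another new, unlabeled unit
print(first, second)
```

The training step sees both the inputs and the desired outputs; the prediction step sees only inputs. Production systems would use far richer features and models, but the division of labor is the same.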

In unsupervised learning, the machine studies data to identify patterns. There are no example successes to train from and no human instructions as guidance. The machine discovers associations and relationships through analysis of a data set. That can involve many ways of labeling, grouping, or arranging data (e.g., as vectors and matrices) that help to reveal such correlations. For example, designing the output of a machine learning (ML) model as a vector supports efficient use of that output in subsequent algorithms.
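As a rough sketch of pattern discovery without labels, the block below groups a handful of invented sensor readings with a simple one-dimensional k-means routine. The readings are hypothetical, and k-means is only one of many possible grouping methods; the function assumes at least two clusters.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Group unlabeled numbers into k >= 2 clusters by iteratively refining cluster means."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centers across the sorted data.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

# Hypothetical, unlabeled pH readings from two unknown operating regimes
readings = [7.01, 6.98, 7.03, 7.40, 7.38, 6.99, 7.42]

centers, clusters = kmeans_1d(readings)
print(centers)
print(clusters)
```

No reading was tagged in advance; the two groups emerge from the data alone. The returned list of centers is itself a small vector that a later algorithm could consume directly.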

Applications involving those learning approaches include quality checks and digital inspections of aseptically prefilled syringes, vials, and cartridges. One type of supervised system uses ML for rapid monitoring of finished units. It recognizes nonvalid patterns in digital images of unit components by splitting the image into digestible parts (6). In that way, AI applies a function akin to human cognition at high speed and performance, after the right data are used to train models.

The Devil in the Details

Classic approaches required users to work with aggregated statistical variables to represent large data sets (e.g., large time-series data). In so doing, users would lose opportunities for the deeper understanding available only from an entire data set. Derived statistics such as mean, standard deviation, and quartiles are used to compare variables by aggregating and collapsing large series of numbers into just a few representative factors. Although that strategy is highly effective in many circumstances, it misses important dynamics in others because it masks the rich granularity of the actual data.
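A small, invented example shows how aggregation can hide dynamics. The two hypothetical temperature series below contain exactly the same values in a different order, so their mean, standard deviation, and quartiles are identical, yet one drifts steadily and the other swings back and forth.

```python
import statistics

def summary(series):
    """Aggregate a series into mean, population standard deviation, and quartiles."""
    return (
        statistics.mean(series),
        statistics.pstdev(series),
        statistics.quantiles(series, n=4),
    )

def mean_abs_step(series):
    """Average size of the change between consecutive readings (uses the time order)."""
    return statistics.mean(abs(b - a) for a, b in zip(series, series[1:]))

# Hypothetical readings in degrees C; same values, different time order
drift = [36.0, 36.5, 37.0, 37.5, 38.0, 38.5]
oscillation = [36.0, 38.5, 36.5, 38.0, 37.0, 37.5]

same_summary = summary(drift) == summary(oscillation)
drift_step = mean_abs_step(drift)
oscillation_step = mean_abs_step(oscillation)
print(same_summary, drift_step, oscillation_step)
```

The summaries cannot tell the two series apart, whereas a measure that respects the order of the full data set can.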

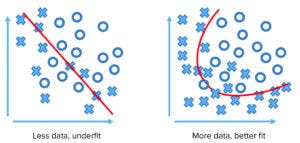

Figure 1: Limited data points lead to underfitting and faulty assessment.

AI and big-data mechanisms can work with native data sets and interpolate within existing gaps between points to reveal accurate patterns, correlations, and even causal relationships. Such abilities can help to solve problems arising from analytics that underfit existing data or from data that are too sparse to represent the phenomenon adequately. Limited or misapplied models and deficiencies in a data set can lead to erroneous conclusions. Figure 1 shows how limited data can lead to poor modeling of a phenomenon.
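The effect of sparse data can be sketched numerically. The block below assumes a purely hypothetical "true" signal (one sine oscillation per unit time) and compares a model interpolated from three samples with one interpolated from 41 samples; neither the signal nor the sampling plan is taken from Figure 1.

```python
import math

def phenomenon(t):
    """Hypothetical 'true' process signal: one oscillation per unit time."""
    return math.sin(2 * math.pi * t)

def interpolate(samples, t):
    """Piecewise-linear estimate at t from time-sorted (time, value) samples."""
    for (t0, y0), (t1, y1) in zip(samples, samples[1:]):
        if t0 <= t <= t1:
            return y0 + (y1 - y0) * (t - t0) / (t1 - t0)
    raise ValueError("t lies outside the sampled range")

def max_error(n_samples, n_checks=200):
    """Worst-case gap between the interpolated model and the true signal on [0, 1]."""
    times = [i / (n_samples - 1) for i in range(n_samples)]
    samples = [(t, phenomenon(t)) for t in times]
    checks = [j / n_checks for j in range(n_checks + 1)]
    return max(abs(interpolate(samples, t) - phenomenon(t)) for t in checks)

sparse_error = max_error(3)    # samples at t = 0, 0.5, and 1 all read about zero
dense_error = max_error(41)    # closely spaced samples follow the oscillation
print(sparse_error, dense_error)
```

With three samples, every reading is close to zero, so the interpolated model looks flat and misses the oscillation entirely; with dense sampling, the same simple interpolation tracks the signal closely.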

Thus, granularity (specificity of detail) in data assessment becomes essential in fields such as genomics, in which the usual statistical aggregation and distributions cannot work and every single unit of information (e.g., a single nucleotide polymorphism in DNA) counts in research. Examples of AI’s power include effective classification of cancer using DNA microarrays (7), blood-based DNA epigenomic prediction of cerebral palsy (8), and detection of chemical–biological interactions associated with oxidative stress and DNA damage (9). Those examples also demonstrate success from a data-management perspective because such projects with the human genome require data storage of about 3 GB.
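The storage figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes roughly 3.1 billion base pairs in a haploid human genome and one byte per base in plain text; both are assumptions added here for illustration, and the two-bit packing is shown only for comparison.

```python
BASE_PAIRS = 3.1e9      # assumed length of a haploid human genome
BYTES_PER_GB = 1e9      # decimal gigabyte

def genome_size_gb(bits_per_base):
    """Storage needed for one genome at a given encoding density."""
    return BASE_PAIRS * bits_per_base / 8 / BYTES_PER_GB

text_gb = genome_size_gb(8)     # one ASCII character (A, C, G, or T) per base
packed_gb = genome_size_gb(2)   # four possible bases fit into two bits
print(f"{text_gb:.2f} GB as text, {packed_gb:.3f} GB packed")
```

Under those assumptions, an uncompressed text representation lands close to the 3 GB cited above; quality scores, annotations, and multiple samples would increase real-world requirements.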

Development to Deployment

Candidly, linear models in analysis are the most common approach for nearly all processes in pharmaceutical science. However, the reality behind the mathematics is seldom linear. Most pharmaceutical operations are based on many nonlinear processes in which physical and chemical interactions require complicated engineering expressions to control the systems.

Inherent multivariability and complexity exist in the interactions of raw materials with equipment, human labor, and operational environments for manufacturing active pharmaceutical ingredients (APIs). Such interactions can be managed with mathematical accuracy only by accepting their inherent complexity as part of both the problem statements and solutions. Nevertheless, critical processes still are controlled by using monovariable approaches and by checking the values generated in production in isolation. The only goal achievable that way is to ensure that each value individually falls within its limits, without considering the continuous interactions and multivariable relationships among them.
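The gap between monovariable checks and a multivariable view can be illustrated with two correlated parameters. In the sketch below, the historical batches, limits, and candidate points are all hypothetical, and the Mahalanobis distance is used simply as one familiar multivariate measure, not as the method applied in any system cited here.

```python
import statistics

def within_limits(point, limits):
    """Monovariable check: is every value inside its own (low, high) range?"""
    return all(low <= value <= high for value, (low, high) in zip(point, limits))

def mahalanobis_2d(history, point):
    """Distance of a point from the historical mean, scaled by the 2x2 covariance."""
    xs, ys = zip(*history)
    mx, my = statistics.mean(xs), statistics.mean(ys)
    n = len(history)
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in history) / (n - 1)
    det = sxx * syy - sxy ** 2
    dx, dy = point[0] - mx, point[1] - my
    # Quadratic form d^T * inverse(covariance) * d, written out for the 2x2 case.
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return d2 ** 0.5

# Hypothetical history: two process parameters (scaled units) that rise together
history = [(1, 1.2), (2, 1.8), (3, 3.1), (4, 3.9), (5, 5.0)]
limits = [(0.5, 5.5), (0.5, 5.5)]

typical = (4.5, 4.4)   # follows the historical relationship
unusual = (4.5, 1.5)   # each value is in range, but the combination is not typical

typical_distance = mahalanobis_2d(history, typical)
unusual_distance = mahalanobis_2d(history, unusual)
print(within_limits(typical, limits), within_limits(unusual, limits))
print(typical_distance, unusual_distance)
```

Both points pass the individual limit checks, but only the multivariate distance reveals that the second combination departs sharply from how the two parameters have behaved together.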

Biopharmaceutical manufacturing can be considered as an example operation that brings complex systems, potential variability, and control parameter sensitivity to a manufacturing process. This complexity originates from the inhomogeneous, nonequilibrium, and dynamic systems in bioprocesses. One concern is exemplified by the current use of principal component analysis (PCA) and partial least squares (PLS), which are useful tools for establishing links among formulation parameters, processing variables, product quality attributes, and performance characteristics. Although such approaches can reduce the dimensionality of complex process data based on multivariable approaches, they nevertheless assume linearity.

Linear models such as those based on multiple linear regressions and multiple single-variate analyses have been popular in both pharmaceutical process development and operations. A good mathematical analogy is the strengths and limitations of Taylor-series approximations of nonpolynomial functions: They are accurate near the point of expansion but degrade farther from it. The historic use of such linear models in bioprocess development and biomanufacturing, which involve nonlinear systems (and multicollinearity), indicates the previous lack of better approaches.
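The Taylor-series analogy can be made concrete with a short calculation. The sketch below uses the exponential function purely as a convenient nonlinear stand-in (it is not a bioprocess model) and compares it with its first-order, linear approximation near and far from the expansion point.

```python
import math

def linear_taylor_exp(x, x0=0.0):
    """First-order Taylor approximation of exp(x) around x0."""
    return math.exp(x0) * (1 + (x - x0))

def relative_error(x):
    """Relative error of the linear approximation (expanded at 0) at point x."""
    exact = math.exp(x)
    return abs(linear_taylor_exp(x) - exact) / exact

near_error = relative_error(0.1)   # close to the expansion point
far_error = relative_error(2.0)    # well away from the expansion point
print(f"near: {near_error:.4f}, far: {far_error:.4f}")
```

Close to the expansion point, the linear model is off by less than 1%; farther away, it misses by more than half. A linear process model behaves similarly: It can be serviceable within a narrow operating window and misleading outside it.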

AI offers many diverse algorithms to choose from in creating realistic mathematical models to explain nonlinear behaviors accurately. Hybrid models formed by orthogonal analytical approaches and AI-based data analysis combine strengths to identify complex system behaviors in product and process design. The “CPV of the Future” is an initiative from the Parenteral Drug Association in which pharmaceutical companies and nonprofit organizations are collaborating to develop advanced continued process verification (CPV) systems to control upstream processes, including those developed using AI (10).

The appearance of commercial applications of AI in process development by pharmaceutical entity providers, consultant services, and AI product distributors is evidence of a growing appreciation for AI. For example, Cytiva’s recent partnership with Nucleus Biologics enables biomanufacturers to use AI power in cell culture media development. Users can formulate their own custom cell-culture media through the interface of expert metabolite and formulation sourcing with AI-driven data processing (11).

Data Determines

Using large amounts of well-managed data is important because the suite of algorithms we refer to as “AI” is shockingly good at some tasks and remarkably bad at others. For example, AI is phenomenal at recognizing correlations in enormous (even poorly managed) multidimensional data sets and analyzing complex problems in terms of a previously detailed and interdependent set of rules. However, even the most advanced expert systems of today are very poor at the practical application of even mildly abstract concepts or taxonomies (12).

For example, humans can enjoy the struggle of memory games, such as with a deck of cards turned face down. It is incredibly easy for AI to play such games because computer “vision” recognizes an up-turned card and never forgets where it is when turned face-down.

On the other hand, it is rather easy for humans to understand accurately the concept of a “vehicle,” for example. That understanding allows people to easily recognize even a new type of vehicle, such as a combat tank with treads. But consider the following scenario with AI: We can build models from thousands of example vehicles for AI to use (e.g., those with convolutional neural networks in deep learning frameworks). AI then can recognize an automobile accurately, for example, in any orientation, in a new photo. However, it can be shown that current AI approaches, including “expert systems” based upon large sets of rules, don’t truly understand the idea of what a vehicle is in the abstract, much less robustly apply such an understanding in practice. For example, a cannon can have wheels and a large opening in its structure, but it is not a vehicle (Figure 2).

Figure 2: Is a cannon a coach? Although that question is easy for us to answer, AI has trouble with the abstract concept of a vehicle.

AI in Product Development

The power of AI in biopharmaceutical development isn’t simply a desired expectation; it is being demonstrated now. Anastasia Holovchenko, a physicist at Janssen Pharmaceuticals, recently reported that the company had “developed an automated imaging program that enables AI computer vision for formulation screening purposes” (12).

AI also aids in cell-culture development. Cellular metabolism and gene expression can be represented as a set of rules, which expert systems can use in interpreting experimental data. ML tools can use large (or highly managed) data sets to rapidly examine, filter, and catalog scalar or matrixed data on the way to a solution. ML-empowered digital twins are in silico models of equipment or processes that can be used to predict the future states of those systems.

A common mistake is to assume that creating a model of something will automatically deliver value (14). In fact, creating a useful model requires asking the right questions, using appropriate data sets, and choosing the best algorithm. AI-based supervised learning analysis of both incoming data and a modeling output assists greatly in deriving value from even a well-trained model.

AI’s power in biopharmaceutical target and entity discovery was illustrated when Insilico Medicine announced the nomination of a novel target and preclinical candidate therapeutic designed by the Pharma.AI platform (15). The company’s AI-powered discovery tools were used for target selection and prioritization, followed by its product development engine. The highly integrated architecture of the platform allowed for linking biology and chemistry into a smooth, well-orchestrated research workflow.

AI in Process Development

Critical factors considered in establishing process operating conditions are indicated by the math, science, and data gained in laboratory experiments. AI’s expert systems are providing significant benefit in such activities. However, scale-up and technical-transfer processes subsequently can be impeded by flawed assumptions and imperfect models. That can occur even if all essential prescribed process improvements, product stability factors, new analytics, assay variability, geographical placement considerations, and scale factors have been identified.

Process verification and product comparability exercises can be difficult because many models rely on oversimplified equations and do not address the actual continuous multidimensional variability (e.g., raw materials, environmental conditions, operators, control actions) and complexity of a system (e.g., continuous and multivariable dependencies). Here again, AI’s comprehensive use of all data and the availability of diverse algorithms are coming to the rescue.

The need for accurate model development becomes even more apparent when attempting to verify a design space established under the quality by design (QbD) approach of the European Medicines Agency (EMA) and/or the US Food and Drug Administration (FDA). A design space is a multidimensional combination and interaction of material attributes and process parameters demonstrated to provide product quality. Generally, it is established at early stages of product development, based on experiments conducted at laboratory or pilot scale.

Many factors are used to establish confidence in a multidimensional design space at commercial scale. One such factor is the scale dependency of underlying input process parameters, material attributes, and their multivariable interactions. Experienced commercial vendors of comprehensive AI now are valuable in identifying the relevant factors that define a multivariate design space to control the drug manufacturing process within a real CPV strategy (16, 17).

AI’s maturity for process analysis and prediction in support of digital twin technology is evidenced by the success of Insilico Biotechnology’s mechanistic and material balance “rules” in handling volumes of process data. “We have developed rules using the biochemical pathway in cells for the models. These are combined with process data, which can be exploited using artificial intelligence to predict cell culture performance and flag future deviations,” says Klaus Mauch, the company’s chief executive officer (18).

The First Steps of AI

The incredible amount of data generated from processes (by both classical and novel means) represents only the first step to efficient product and process development, technical transfer, and model-based control. Nonlinear strategies and complex system management are two pillars of AI. Its unsupervised and supervised algorithms, together with deep and recurrent learning, elucidate details from the full complement of data sets. All details that can be mathematically detected are included in the analysis. AI is changing our relationship to biopharmaceutical process data, from the amount of data addressed to the depth and specificity of analytic approaches.

References

1 Larkin Z. General AI vs Narrow AI. Levity: Berlin, Germany, 28 October 2021; https://levity.ai/blog/general-ai-vs-narrow-ai#:~:text=In%20simple%20terms%2C%20Narrow%20AI,and%20skills%20in%20different%20contexts.

2 Manzano T, Canals A. Measuring Pharma’s Adoption of Industry 4.0. Pharm. Eng. January–February 2022; https://ispe.org/pharmaceutical-engineering/january-february-2022/measuring-pharmas-adoption-industry-40.

3 Toosi A, et al. A Brief History of AI: How to Prevent Another Winter (A Critical Review). PET Clin. 16(4) 2021, 449–469; https://doi.org/10.1016/j.cpet.2021.07.001.

4 Strickland E. Andrew Ng: Unbiggen AI — The AI Pioneer Says It’s Time for Smart-Sized, “Data-Centric” Solutions to Big Issues. IEEE Spectrum, 9 February 2022; https://spectrum.ieee.org/andrew-ng-data-centric-ai.

5 Shaywitz D. Novartis CEO Who Wanted to Bring Tech Into Pharma Now Explains Why It’s So Hard. Forbes, 16 January 2019; https://www.forbes.com/sites/davidshaywitz/2019/01/16/novartis-ceo-who-wanted-to-bring-tech-into-pharma-now-explains-why-its-so-hard/?sh=3bfd74a87fc4.

6 Regmi H, et al. A System for Real-Time Syringe Classification and Volume Measurement Using a Combination of Image Processing and Artificial Neural Networks. J. Pharm. Innov. 14(4) 2019: 341–358; https://doi.org/10.1007/s12247-018-9358-5.

7 Greer BT, Khan J. Diagnostic Classification of Cancer Using DNA Microarrays and Artificial Intelligence. Ann. NY Acad. Sci. 1020(1) 2004: 49–66; https://doi.org/10.1196/annals.1310.007.

8 Bahado-Singh RO, et al. Deep Learning/Artificial Intelligence and Blood-Based DNA Epigenomic Prediction of Cerebral Palsy. Int. J. Mol. Sci. 20(9) 2019: 2075; https://doi.org/10.3390/ijms20092075.

9 Davidovic L, et al. Application of Artificial Intelligence for Detection of Chemico-Biological Interactions Associated with Oxidative Stress and DNA Damage. Chem. Biol. Interact. 25 August 2021: 109533; https://doi.org/10.1016/j.cbi.2021.109533.

10 Manzano T. PDA Study Explores Role of A.I. in CPV. PDA Letter 7 April 2020; https://www.pda.org/pda-letter-portal/home/full-article/pda-study-explores-role-of-a.i.-in-cpv#:~:text=The%20complexities%20associated%20with%20drug,involved%20and%20the%20interconnected%20processes.

11 Taylor BN. Cytiva and Nucleus Biologics Join Forces. Nucleus Biologics: San Diego, CA, 20 January 2022; https://nucleusbiologics.com/resources/cytiva-nucleus-biologics-join-forces.

12 Elliott T. The Power Of Artificial Intelligence Vs. The Power of Human Intelligence. Forbes 9 March 2020; https://www.forbes.com/sites/sap/2020/03/09/the-power-of-artificial-intelligence-vs-the-power-of-human-intelligence/?sh=4762038f346c.

13 Spinney L. Are We Witnessing the Dawn of Posttheory Science? The Guardian 9 January 2022; https://www.theguardian.com/technology/2022/jan/09/are-we-witnessing-the-dawn-of-post-theory-science.

14 Expert Q&A: What Is and Is Not a Digital Twin? ThoughtWire: Toronto, Canada, 30 July 2020; https://blog.thoughtwire.com/what-is-and-is-not-a-digital-twin.

15 AI-Discovered Novel Antifibrotic Drug Goes First-in-Human. Insilico Medicine: New York, NY, 30 November 2021; https://insilico.com/blog/fih.

16 Manzano T, et al. Artificial Intelligence Algorithm Qualification: A Quality by Design Approach to Apply Artificial Intelligence in Pharma. PDA J. Pharm. Sci. 75(1) 2021: 100–118; https://doi.org/10.5731/pdajpst.2019.011338.

17 Empowering SMEs Through 4IR Technologies: Artificial Intelligence. United Nations Industrial Development Organization: Vienna, Austria; https://hub.unido.org/sites/default/files/publications/Empowering%20SMEs%20through%204IR%20Technologies.pdf.

18 Markarian J. Opportunities for Digital Twins. BioPharm Int. 34(9) 2021: 30–33; https://www.biopharminternational.com/view/opportunities-for-digital-twins.

William G. Whitford is life science strategic solutions leader at DPS Group and Editorial Advisory Board member of BioProcess International; 1-435-757-1022; www.dpsgroupglobal.com. Toni Manzano is chief scientific officer and cofounder at Aizon and lecturer at Universitat Autònoma de Barcelona; 34-627-454-605; https://www.aizon.ai.
