
To monitor the control and consistency of products derived from biological systems, a broad array of analytical methods is used for biopharmaceutical release and stability testing. These methods include both classical and state-of-the-art technologies as well as new technologies as they emerge over time. During the life cycle of a product, several reasons can arise for making changes in existing analytical methods: e.g., improved sensitivity, specificity, or accuracy; increased operational robustness; streamlined workflows; shortened testing times; and lowered cost of testing. Note that replacing an existing method is not the same as adding a new method to a release or stability test panel. An added method has no previous data sets, so there is nothing to be “bridged.”

But an existing method is tied to the historical data sets it produced (e.g., for Certificate of Analysis tests) and may still be producing (e.g., for ongoing stability protocols). Thus, significant changes in the method can create a substantial discontinuity between past and future data sets. Also, specification acceptance criteria that were based on historical data from the existing method could be affected by the measurement capabilities of the new method. So it is necessary to conduct an appropriately designed method-bridging study to demonstrate suitable performance of the new method relative to the one it is intended to replace.

Note that a method-bridging study is distinctly different from a method-transfer study. A method transfer demonstrates that a method already established in one laboratory performs comparably in another laboratory. Nor is method bridging the same as adding a new assay to an existing analytical testing regimen. A method introduced de novo is not linked to an existing data set generated by an older method that is being discontinued.

In January 2014, a CASSS Chemistry, Manufacturing, and Controls Strategy Forum explored technical and quality issues associated with replacement of existing analytical methods by new methods during development, including how that transition could be achieved smoothly while maintaining phase-appropriate regulatory compliance. Representatives from the US Food and Drug Administration’s (FDA’s) Center for Drug Evaluation and Research (CDER) and Center for Biologics Evaluation and Research (CBER) presented their agencies’ expectations for transitions in analytical methods. They also provided highlights of relevant examples. Additional regulatory perspectives were contributed by Health Canada representatives during panel discussions.

Forum Chairs and Speakers

Chairs: Nadine Ritter (president and principal analytical advisor of Global Biotech Experts, LLC), Reb Russell (executive director of molecular and analytical development at Bristol-Myers Squibb Company), and Timothy Schofield (senior fellow in regulatory sciences and strategy for MedImmune)

Speakers: Laurie Graham (acting team leader of the division of monoclonal antibodies at FDA’s Center for Drug Evaluation and Research); Paul Dillon (principal scientist in manufacturing sciences and technology at Pfizer Ireland Pharmaceuticals); Frank Maggio (senior scientist at Amgen Inc.); Lokesh Bhattacharyya (chief of the laboratory of analytical chemistry and blood related products at FDA’s Center for Biologics Evaluation and Research); Dieter Schmalzing (principal technical advisor) and Wei-Meng Zhao (QC senior scientist) from Genentech, a member of the Roche Group; and Kenneth Miller (senior scientist) and Harry Yang (senior director and head of nonclinical biostatistics) from MedImmune, a member of the AstraZeneca Group

Several companies presented their experiences and recommendations for bridging analytical methods during product development and after commercialization. With permission, some speaker slides are posted on the CASSS website (http://casss.site-ym.com/?CMCJ1407) along with forum summary slides. Panel and audience discussions addressed key questions on

how bridging studies should be staged to compare performance of new methods relative to previous ones

how to ensure that a new method can satisfy the same intended use(s) as the older one

what to do if a new method affects acceptance criteria for specifications that were established using the previous method.

CMC Forum Series

The CMC Strategy Forum series provides a venue for biotechnology and biological product discussion. These meetings focus on relevant chemistry, manufacturing, and controls (CMC) issues throughout the lifecycle of such products and thereby foster collaborative technical and regulatory interaction. The forum strives to share information with regulatory agencies to assist them in merging good scientific and regulatory practices. Outcomes of these meetings are published in this peer-reviewed journal to help assure that biopharmaceutical products manufactured in a regulated environment will continue to be safe and efficacious. The CMC Strategy Forum is organized by CASSS, an International Separation Science Society (formerly the California Separation Science Society) and supported by the US Food and Drug Administration (FDA).

Regulatory Elements

Regulatory authorities generally encourage sponsors to adopt new technologies whenever feasible to enhance understanding of product quality and/or testing efficiency. That is what the “C” in CGMP means: current good manufacturing practice, in which quality control (QC) tests are part of a total control strategy to ensure that each product batch is of high quality. Changes to analytics and specifications are an expected component of a product life cycle. But because of the potential for significant influence on existing product specifications in a sponsor’s regulatory filings, authorities need to know how each sponsor makes changes in analytical methods and what risk those changes might pose to product QC. Analytical testing provides data to support continuous developmental and manufacturing consistency throughout a product’s life cycle.

Regulatory Guidance

US Regulations

FDA Guidance

ICH Guidance

Lokesh Bhattacharyya of CBER outlined current regulations and guidance documents that include regulatory expectations for making changes in existing analytical methods and managing such changes throughout a method’s life cycle. Although such regulatory requirements are formally applicable to CGMP testing of approved biotechnological/biological products, the basic principles should be used during product development. The “Regulatory Guidance” box lists documents relevant to US products, and the “Types of Changes” box details what constitutes major, moderate, and minor changes.

Types of Changes

Moderate Changes

Minor Changes

Source: FDA Guidance for Industry: Changes to an Approved Application: Biological Products (July 1997)

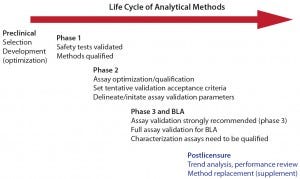

Figure 1: Life cycle of analytical methods (with permission from Laurie Graham)

Before method validation, performance history should be assessed to establish predetermined acceptance criteria for indicated validation parameters. Full CGMP validation of assays used for release, stability, and in-process testing is required for submission of a biologics license application (BLA). But to minimize the risk of potential measurement gaps or errors in critical data sets that support product specifications for release and stability (and process validation/verification), Graham strongly recommended validation of those methods before initiating major phase 3 studies. After validation, method performance should continue to be tracked/trended to confirm that validated methods remain in a state of operational control.

A major principle of method life cycles is that analytical testing strategy evolves with process and product knowledge. In some instances, the evolving strategy includes making a change to an existing assay. Both CDER and CBER speakers encouraged adoption of new methods that improve understanding of product quality and stability or provide more robust, rugged, and reliable assay performance. Such a decision may be driven by a number of factors associated with the expected performance of a new technique:

Improved understanding of process or product characteristics

Enhanced process and product comparability assessment

Increased control of process consistency and product quality

Improved sensitivity or specificity of product-stability monitoring

Increased method control and robustness (fewer invalid tests)

Faster QC testing turnaround time (less hands-on time or fewer steps)

Reduced cost per test (lower cost reagents, higher sample throughput)

Replacement of a current method’s instruments, reagents, or materials being phased out by suppliers

Improved harmonization of methods across multiple testing sites or multiple product types

Introduction of new technology with improved measurement capabilities.

Both regulatory and industry participants strongly advised that risk assessment be performed to evaluate the impact of a method change in the context of an entire analytical control strategy to support product safety and efficacy. Some sponsors change methods that are reported in current investigational new drug (IND), BLA, or new drug application (NDA) documents. Regulatory and industry speakers emphasized that those sponsors should consider effects on existing product specifications, total analytical control strategy, and testing laboratory operations.

The regulatory criterion for accepting a change is that the new method demonstrates performance capabilities equivalent to or better than those of the method being replaced for measured parameters. The proposed method should not be less sensitive, less specific, or less accurate for its intended use than the method it will replace. However, when it is not technically possible to meet this criterion, a sponsor should provide a data-driven justification for why it cannot be achieved. That justification should include context and other appropriate control strategy elements that support replacing a current method with an alternative method that does not perform as well as the current method does in some respects. Under such circumstances, sponsors should discuss these issues with reviewers before submission.

In his talk, “Replacing Analytical Methods for Release and Stability Testing: A CBER Perspective,” Bhattacharyya acknowledged that implementing new analytical technologies that are more sensitive, selective, or specific than existing methods could open “Pandora’s box.” They may reveal features of a product that were previously undetected. The message to sponsors was to remain calm. He pointed out that if nothing changes in its manufacturing process, then a product should have the same composition that was proven to be safe and effective in clinical trials. When a new and more sensitive technology is used, and a new species/component(s) is detected or a new attribute identified, that does not automatically mean that a given product is deemed to have poorer quality or stability. ICH Q6B notes that biologically derived products typically have a high degree of molecular heterogeneity. Manufacturers select appropriate analytical methods to define the inherent pattern of heterogeneity for their products.

Bhattacharyya pointed out that a new technique simply may allow higher resolution of heterogeneities that were always present in a product but not detected by previous method(s). The typical approach is to use the new method to test retained samples from previous batches to determine whether the newly identified components/attributes were present previously. Characterizing new species allows comparison with what is known about both product and process from development studies. If a previously unseen component or attribute is considered to be a potential risk to safety, he said, “you would rather know and address it now before it may cause harm to patients if left undetected. FDA may ask you to do additional studies to show that it is safe.”

Graham noted that as sponsors increase their product knowledge and process understanding, she typically sees them move toward adopting methods for testing specific attributes: e.g., detecting specific posttranslational modifications rather than using general charge-separation assays. She recommended that if a new assay detects seemingly new product-related variants or process-related impurities, then information from retained product samples should be provided to demonstrate that the variants/impurities are not really new, only newly detected. Such data should be obtained by testing multiple lots of samples reflecting a product’s life cycle and by running both current and proposed assays in parallel for a period to generate real-time comparative data and assess their measurement capabilities.

Often when a method for purity determination is changed, purity values seem to decrease. Graham stated that this is “not the end of the world” for sponsors; it could be justified by an increased sensitivity of the new assay for product variants or degradants. A few case studies she presented illustrated such scenarios in which sponsors collected sufficient side-by-side assay data to prove that their apparent decreases in purity did not reflect a change in product quality. But she also described the case of a proposed change in a potency assay that was deemed unacceptable. Data from stability studies (including forced degradation) showed for one product that the original cell-based assay was more sensitive to product degradation than the proposed ligand-binding assay would have been. Graham noted that in some cases the opposite was true: A ligand-binding procedure was more sensitive to product stability than a cell-based procedure (1).

Stability programs present a particularly challenging element of making changes in existing analytical methods in the midst of ongoing studies (2). Each stability batch has existing data sets linked to its time zero (t = 0) data. Changing a method midstream will break its link to the original t = 0 data collected with a previous method. Moreover, specification acceptance criteria applicable for stability are based on the analytical methodology originally in use in those studies. Bhattacharyya outlined a systematic approach to changing stability methods that includes assessing a proposed new method for comparable (or better) stability-indicating capabilities. It would also include real-time collection of target and accelerated stability data using both methods. A new method with better sensitivity/specificity for product degradation could affect existing stability acceptance criteria. In such cases, he recommends that sponsors draft a study plan for assessing their new methods and collecting sufficient data to support changing them — and potentially the acceptance criteria as well — in the stability protocols. Companies should discuss those plans with FDA regulators for concurrence before implementing changes.

Graham also mentioned that potency-assay changes would be required during product development if an initial assay were insufficient to monitor product quality relative to a therapeutic’s mechanism of action (MoA). She highlighted three typical IND comments from CDER regulators regarding potency assay changes necessary for monoclonal antibody (MAb) products (see the “Example IND Comments” box).

Example IND Comments from CDER Regulators on Changes in MAb Potency Assays |

Clinical Materials: “While the current potency assay is sufficient for initiation of clinical studies, a potency assay (with acceptance criteria) that reflects the primary presumed in vivo mechanism of action of [redacted] should be incorporated into drug substance and drug product lot-release and stability testing prior to entry into a major efficacy trial. Sample retains from the preclinical and clinical lots should be appropriately stored for use in the qualification/validation of this assay and to ensure lot-to-lot consistency with regard to potency.” |

Fc-Region Functionality: “The use of CDC as a potency assay is acceptable for early phase clinical trials. However, ADCC and phagocytosis (ADCP) are also indicated as being important for the mechanism of action of [redacted]. ADCC and ADCP require binding to Fc-receptors via the Fc-region of [redacted] and may be sensitive to changes in a MAb’s glycosylation pattern. The CDC assay may therefore not adequately control for this class of Fc-region dependent activity. For licensure, the product will need to be fully characterized for its Fc-region functionality, and lot release and stability assays will need to be developed that provide adequate control over [redacted]’s proposed in vivo functional activity.” |

ELISAs: “If the ELISA assay is to be used as the sole potency for licensure, data will need to be provided that demonstrate that the ELISA and bioassay have similar performance capabilities, stability indicating sensitivities, and that the biological information conveyed by these assays is comparable. The data would need to include, but not be limited to, information on whether changes in either the binding or Fc-regions that impact product activity or stability are detected with equal sensitivity by both assays. It was strongly recommended that if a decision is made to implement the ELISA assay as the potency assay for licensure, that both assays be used for release and stability testing throughout development to allow for the accumulation of a robust dataset that supports comparable performance and sensitivity of the two assays.” |

Graham also highlighted another common analytical method often changed during product development: the host-cell protein (HCP) enzyme-linked immunosorbent assay (ELISA). To provide more accurate measurement of process HCPs, most sponsors need to bridge from generic to specific HCP antisera (3, 4). A typical approach is to demonstrate adequate HCP coverage by the specific antiserum, validate the ELISA for that new immunoreagent, and then retest previous drug substance (DS) batches to replace generic ELISA values for residual HCP levels. In a case study she presented, however, at time of licensure CDER regulators found an HCP assay to be deficient with regard to HCP impurity coverage, so the sponsor committed to developing a new HCP assay. At the same time the sponsor implemented the new HCP ELISA, it also made changes to the manufacturing process. The postchange DS had higher HCP levels than the prechange DS lots, and data indicated that was related to the process change rather than the assay change. So the sponsor had to modify its new process further to improve HCP clearance back to acceptable levels.

In some case studies, a new method underperformed compared with the existing method but had to be implemented for other reasons. In such instances, an intended attribute might be compensated for by something else in the total process/product control strategy. Regulators stated that such trade-offs may be considered acceptable when supported by sufficient data and adequate risk assessment on maintaining product quality and process consistency. Graham said that with good process and product knowledge, sources of variability should be identified and a control strategy developed that uses appropriate and relevant analytics for in-process controls, release and stability tests, process monitoring, and product comparability assessments.

If a new method cannot perform as well as an older one, a sponsor may yet be able to justify removing the existing assay from its specifications. For example, the sponsor could provide information showing that the original assay did not yield meaningful data (e.g., it does not measure a CQA) or present evidence that the tested product attribute is so well controlled in process that it no longer justifies routine measurement. However, Graham emphasized, process and product CQAs that are not adequately identified challenge sponsors to justify an appropriate control strategy.

Industry Experiences

Representatives of major biotech companies presented detailed case studies of their own experiences.

In “Bridging Methods for Analysis of Vaccines: Opportunities and Challenges,” Paul Dillon of Pfizer illustrated the method-bridging studies his company performed to support molecular mass analysis as a replacement for size-distribution testing. He outlined a highly systematic process of assessing new/improved methods from proof of concept through method qualification/validation to their impact on existing product specifications. Of particular emphasis were considerations in selecting the types and number of product samples to be included in a method-bridging study. The objective was to include samples that yield data providing the greatest assurance of method equivalence across the entire range of possible results. Such a dataset includes representative commercial lots (e.g., n > 30 lots), stability samples within and beyond expiry periods, edge-of-specification samples, forced-degradation/aberrant samples, and samples from clinical experience. If results show that a new method is more sensitive and specific than the original, then that method could affect the existing specification range. If so, the company assesses correlation of both methods to establish a new specification range that can be confirmed to be acceptable by testing clinical samples. Dillon described the logistics of implementing a method change within Pfizer’s regulatory-affairs strategy and (if approved) into the company’s operational quality system.

Genentech speakers described a life-cycle strategy for a licensed potency assay to bridge changes from manual to automated procedures with a potency reference standard change (5). In “Replacement of a Commercially Approved QC Potency Assay,” Dieter Schmalzing and Wei-Meng Zhao highlighted elements of a coordinated life-cycle management system that includes method validation, assay training, method transfer, critical reagent control, cross-site assay monitoring, and ongoing technical support from subject-matter experts. This system allows for continuous improvement in potency methods through targeted technical enhancements, replacement of methods that have become inadequate, and retirement of methods no longer needed.

When designing a comparability assessment for potency assays, they noted, simply comparing validation data from two methods is insufficient to fully bridge assay performance capabilities. Validation studies are performed at different times by different personnel using different instruments, reagent sets, and so on, with only the reference standard in common. To minimize variances when comparing two methods, Genentech uses head-to-head (side-by-side) testing of the same sample sets, including lot-release samples, stability samples, and stressed samples. The company also uses statistically relevant numbers of samples and runs for a high degree of confidence in the results, and it establishes predefined acceptance criteria based on existing specification and manufacturing capabilities.
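As one hedged illustration of such a head-to-head evaluation, the Python sketch below summarizes paired results from the same samples run by both methods and checks whether an approximate 90% confidence interval for the mean difference falls inside a predefined margin. The function name, the example values, and the margin are hypothetical and are not taken from the Genentech presentation. The interval uses a normal quantile, which is a large-sample simplification; a t quantile would give a wider interval for a small number of samples.

```python
import math
from statistics import NormalDist, mean, stdev


def paired_bridging_summary(old_results, new_results, margin, confidence=0.90):
    """Summarize paired head-to-head results (hypothetical helper).

    Returns (mean_difference, (lower, upper), within_margin), where each
    difference is new minus old for a sample tested by both methods.
    """
    if len(old_results) != len(new_results) or len(old_results) < 2:
        raise ValueError("need at least two paired results")
    diffs = [new - old for old, new in zip(old_results, new_results)]
    mean_diff = mean(diffs)
    std_err = stdev(diffs) / math.sqrt(len(diffs))
    # Assumption: normal quantile; prefer a t quantile for small data sets.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    lower, upper = mean_diff - z * std_err, mean_diff + z * std_err
    # The whole interval must sit inside the predefined +/- margin.
    within_margin = -margin < lower and upper < margin
    return mean_diff, (lower, upper), within_margin


# Made-up relative potency values (%) for six samples split between methods.
old = [100, 98, 102, 101, 99, 97]
new = [101, 99, 101, 102, 100, 98]
mean_diff, interval, ok = paired_bridging_summary(old, new, margin=3.0)
```

Requiring the entire interval to fall inside the margin, rather than only the mean difference, is a deliberate choice in this sketch: a small or noisy data set then makes it harder, not easier, to conclude that two methods agree.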

In three highly detailed case studies (two assay replacements and one assay enhancement), Schmalzing and Zhao discussed specific issues encountered with making changes to potency methods. Their examples came from a legacy product that has been licensed for many years, having undergone several historical changes in instruments, materials, assay controls, and product-potency reference standards, and moved from manual to automated procedures.

In “Considerations for Implementation of an Analytical Control Strategy for Quality Attributes Discovered in Late Stage Development,” Frank Maggio of Amgen illustrated a risk/benefit assessment of two analytical techniques for monitoring methionine oxidation (MetOx): cation-exchange high-performance liquid chromatography (CEX-HPLC) and focused peptide mapping. Benefits of the CEX method were both technical (e.g., measures several attributes in a single method; more amenable to in-process samples) and operational (e.g., ease of use across global laboratories). But the method was not fully selective (basic peaks included other product variants such as C-terminal lysine), allowing for only indirect correlation of methionine oxidation with FcRn binding. Benefits of the focused peptide map technique were increased specificity, sensitivity, and precision regarding the MetOx variant, allowing for its direct correlation with FcRn binding.

Only a limited historical data set was available for assessing impact on specifications, however, and peptide mapping was procedurally more complex, which complicated its global implementation. Amgen chose to leverage the advantages of both methods in an integrated process/product control strategy using the CEX method for routine quality control (QC) testing for in-process, release, and stability samples. It uses the focused peptide map to support process development and conduct product characterization studies (including end-of-shelf-life samples) and includes both methods in comparability studies and reference-standard qualification.

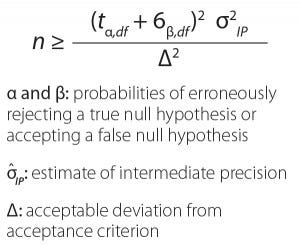

Equation 1: Impact of the number of test runs on method-bridging study (with permission from Miller and Yang)

In “Statistical Considerations for the Design and Evaluation of Analytical Method-Bridging Studies,” Kenneth Miller and Harry Yang of MedImmune detailed a statistical analysis approach for assessing method performance comparability. They explained that the main questions concern where a product is in its life cycle, whether the transition is to a new method or to an improvement on an existing method, and the type of improvement — either operational (e.g., time/resources) or performance (e.g., more specific or less variable). Answers to those questions dictate the type and rigor required of the analysis.

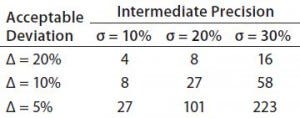

Table 1: Mean difference (% bias) example; required number of runs to achieve 80% chance of success (assume true mean % bias = 0)

Miller and Yang noted that not all analytical method changes are “apples-to-apples” comparisons, so it is important to understand the technical nature of assay differences. They highlighted common practices and problems in statistical analysis of data from method-bridging studies. The take-home messages were that MedImmune demonstrates similarity using equivalence as a research hypothesis, without concluding that if methods are not statistically different, then they are equal. If the company fails to reject a null hypothesis, then it considers whether that is due to insufficient evidence (e.g., study too small) or because the null was actually true. Miller and Yang illustrated the impact of sample size (number of runs) on confidence intervals for achieving an 80% chance of success comparing a given method’s intermediate precision with the amount of deviation from a given acceptance criterion (Equation 1, Table 1).
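The relationship among run count, intermediate precision, and acceptance criterion can be sketched in Python as follows. This is not a reproduction of Equation 1 or Table 1, which are not shown here. It is a minimal sketch that assumes each run yields one % bias value, that those values are normally distributed with a known standard deviation, that the true mean % bias is 0, and that equivalence is concluded when two one-sided tests at the 5% level both succeed. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def chance_of_success(n_runs, sd_percent, margin_percent, alpha=0.05):
    """Approximate probability of concluding equivalence when true bias is 0.

    Assumes a known SD (normal approximation) and symmetric limits of
    +/- margin_percent on the mean % bias.
    """
    std_normal = NormalDist()
    std_err = sd_percent / math.sqrt(n_runs)
    z_alpha = std_normal.inv_cdf(1 - alpha)
    power = 2 * std_normal.cdf(margin_percent / std_err - z_alpha) - 1
    return max(0.0, power)


def runs_needed(sd_percent, margin_percent, target=0.80, alpha=0.05,
                max_runs=10_000):
    """Smallest number of runs whose chance of success reaches the target."""
    for n_runs in range(2, max_runs + 1):
        if chance_of_success(n_runs, sd_percent, margin_percent, alpha) >= target:
            return n_runs
    raise ValueError("target not reachable within max_runs")


# Illustrative inputs only: 10% intermediate precision, +/-10% criterion.
n_for_wide_margin = runs_needed(sd_percent=10.0, margin_percent=10.0)
n_for_tight_margin = runs_needed(sd_percent=10.0, margin_percent=5.0)
```

Under these assumptions, tightening the acceptance criterion relative to the method's variability increases the required number of runs sharply, which is consistent with the point that an undersized study can fail to show equivalence even when the two methods truly agree.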

Panel Discussions and Open-Forum Questions

The following questions were posed to the speakers, panelists, and attendees to focus discussions on common challenges and concerns faced when making changes to QC analytical methods for biotechnology products. Inquiries from the audience are identified as such.

What factors should drive the need to consider one analytical technology over another? Sensitivity, specificity, cost, speed, cross-laboratory harmonization, and regulatory expectations are all important. However, just because you can shift to a new technology doesn’t mean you should. That decision should be based on the answers to these questions:

Do you already have in place an analytical tool kit for biotechnology product quality characteristics (per ICH Q6B and product-specific regulatory agency CMC guidances)? Is it part of a total control strategy with capabilities for impurities and degradants (platform methodologies used with other, similar products do apply)? If so, it may not be justified to add more tools solely for redundant monitoring of critical quality attributes (CQAs) and process consistency.

Are specific new species being reported as concerns with other similar products (yours or a competitor’s)? Is there a risk to product safety or efficacy? Is your tool set deficient for major characteristics and typical CQAs for the product type?

Are degradation pathways being missed by existing control-strategy analytics? It may be necessary to update and expand your analytical tool-set, bridging less capable methods to more capable ones.

For major changes made postapproval, a risk assessment should be performed that takes into account all elements that would be affected by the change. Answering a question from the audience about potential drug shortages, regulators noted that in some cases, the possibility could be an important factor in determining the impact and timing of method changes for certain critical health products. FDA regulators acknowledged that they consider availability of such products to be part of the benefit/risk assessment. However, it would not be the only consideration (e.g., if the change could also improve product quality).

Are those factors different for methods used in early and late-phase product development? For changes made to analytical methods in later phases or after licensure, a company must consider more stringent factors than for changes made early on. The more clinical and commercial product data that accumulate from methods used to test a product for release and stability, the larger is the historical data set that must be bridged.

The risk of having deficiencies in method capabilities — particularly selectivity and sensitivity of methods used in stability protocols — increases as clinical development progresses. The risk of missing impurities that could compromise safety increases as more patients are included in clinical studies. Also, data used to support claims for product stability and to establish proposed shelf life can be jeopardized if analytical methods are not suitable to detect product degradants.

If early phase methods are determined to be insufficiently sensitive, specific, or robust for later use, continuing with them into later phases may present an increased risk to critical chemistry, manufacturing, and controls (CMC) data. Pivotal stability protocols and process validation (both extensive and expensive) may be affected, as may analytical data from lots used in clinical trials. Because those datasets are part of the basis for commercial specifications to be proposed in a product license filing, analytical deficiencies can influence regulatory review decisions.

Maintaining analytical methods that have exhibited chronic performance problems in early development becomes an operational risk over time. At product approval (or shortly after), a method typically is used more frequently and/or more widely in QC laboratories — often globally. Experience is gained more rapidly for an assay using different lots of reagents and multiple instruments and analysts. If the early method was minimally robust, and if system-suitability measures are insufficiently designed to catch performance problems in each run, then data validity is at risk. System-suitability measures may be adequate to prevent reporting of results from flawed (invalid) assay runs, but a high rate of method invalids can signal that a method is not in a state of true operational control. That can be greatly magnified in large-scale testing operations.

Rapid selection and qualification of analytical methods is a priority in early product-development stages, so some operational issues may be accepted because of time constraints. But by the time a sponsor launches commercially approved products, efficiency of operational factors becomes increasingly important to the QC laboratories conducting more release and stability testing than during clinical development. Development and/or qualification of platform methods for some parameters of similar products may provide a useful starting procedure. Moreover, leveraging operational experiences with platform analytical technologies could reduce the risk of performance problems if methods have been widely used in QC for other products. Size-exclusion HPLC (SEC-HPLC) and sodium-dodecyl sulfate polyacrylamide gel electrophoresis (SDS-PAGE) for MAbs are good examples. Both techniques for molecular-weight separation typically require minimal reoptimization when applied to other MAbs. Operational factors that affect SEC-HPLC or SDS-PAGE performance for one MAb generally affect others similarly. When considering a platform method, you should review the rate of invalid runs and assess operational challenges experienced with the existing version of that method in all QC labs where it is being used.

How should a method-bridging study be designed regarding the types of test samples to compare (e.g., intact, degraded, or impure) and numbers of product lots and method runs? Industry and regulatory experts agreed that you cannot solely compare validation data from two methods as a method-bridging exercise. A method validation is a “representative snapshot in time” of method performance using a subset of samples that span the entire specification range for an intended measurement. Often, validation data for an older method were generated using different instruments operated by different analysts at different times than are available in the current laboratory. It would be technically challenging to attempt a direct comparison of old validation data from one method with current validation data from a new method because software and platforms don’t necessarily allow for such a side-by-side comparison.

Typically the only common material included in a method validation study is the product reference standard. Although it can be valuable to track/trend a method’s historical data regarding that standard as a part of investigating ruggedness, the reference standard’s characteristics may be inadequate to represent all types of test materials for analysis (e.g., impurities and degradants). Forum presentations and discussions reiterated the need for method-bridging studies to include samples that encompass all features that could be present in test samples subjected to analysis. If different preparations of degraded or impurity-spiked samples were used in both method-validation studies, comparing the levels measured by each method would be compromised by differences in the amounts and types of impurities present.

The best experimental design evaluates the old and new methods directly in head-to-head assay runs, which gives the clearest comparison of inherent method capabilities. In rare cases, it may be impossible to run such a comparison with split samples (e.g., because a laboratory that had been running the older method no longer can do so, or an original bioassay’s cell line is no longer available). Such circumstances would require much greater consideration of which historical data would be most relevant to compare and how they would be evaluated (e.g., statistical models). In head-to-head comparisons, selected sample types should span the range applicable to a method’s intended use. They should include:

Multiple product lots (drug substance and product, if both are tested by the method), including lots from the edges of the current specifications range

Product lots prepared with different degrees of purity, impurities, and potency (e.g., by spiking or diluting)

Real-time and archived (frozen) stability samples from target, accelerated, and stress conditions according to ICH Q1A(R2) and ICH Q5C stability protocols

Forced-degradation samples (physical and chemical degradation pathways).

The number of product lots that should be examined during a method-bridging study depends on differences seen in method capabilities. When a new method detects new species not seen with the older method, additional product lots (particularly historical lots reflective of clinical materials) usually are analyzed to confirm the presence of those species. It may be necessary to establish new specification limits that incorporate new species, variants, or impurities detected by the new method. All available lots that had been tested with the old method then would be retested with the new method to generate sufficient data for justifying changes to product specifications.

Companies should consider the number of assay runs required to generate a statistically relevant comparative data set. Factors that affect that number include the precision and accuracy of the two methods, existing product acceptance criteria, and the degree of confidence desired in the conclusion. Related decision-making will be challenging if assay readouts and technologies are different. For example, an original method’s readout may have been colorimetric, but the new method’s readout is chemiluminescent, or the original method may be qualitative, but the new method is quantitative.
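How precision, acceptance limits, and desired confidence combine into a run count can be sketched with a common normal-approximation formula for a two one-sided tests (TOST) equivalence comparison. This is an illustration only: the function name, the equivalence margin, and the default error rates are assumptions, not values from the forum, and the formula assumes both methods have the same variability and no true difference in mean.

```python
from math import ceil
from statistics import NormalDist


def runs_per_method(sd, margin, alpha=0.05, power=0.90):
    """Approximate independent runs needed per method for a TOST comparison.

    Assumes normally distributed results, equal SD for both methods, and a
    true mean difference of zero (simplifying assumptions for illustration).
    sd and margin must be in the same units (e.g., % relative potency).
    """
    if sd <= 0 or margin <= 0:
        raise ValueError("sd and margin must be positive")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(1 - (1 - power) / 2)
    return ceil(2 * (sd * (z_alpha + z_beta) / margin) ** 2)


# Hypothetical inputs: 10% intermediate precision, +/-15% equivalence margin.
print(runs_per_method(sd=10.0, margin=15.0))
```

Doubling the assumed variability roughly quadruples the estimate, which is why imprecise methods need far more runs to support the same conclusion.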

Criteria for acceptance of method-comparison runs should be established to define what constitutes “as good as or better than.” First, you are examining the nature of each method’s technology to determine whether the change is like-for-like in the way results are obtained. For some methods such as chromatography and electrophoresis, it is relatively straightforward to compare the selectivity of peaks or bands visually. Determining their inherent method comparability for detection of species would be possible even without a statistically powerful number of runs.

Particularly for nonseparation methods (assuming it is possible to directly compare their readout formats), it is best to conduct an equivalency correlation of results from both assays. This is particularly true for methods such as potency assays, which are typically not selective in measuring individual impurities or degradants but can demonstrate comparable sensitivity to impure or degraded materials.
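As a rough illustration of such a correlation, the sketch below computes the Pearson coefficient together with the least-squares slope and intercept for paired results on the same samples. The function name and the example values are hypothetical. A high correlation alone does not show agreement, so a slope near 1 and an intercept near 0 would also be expected for methods that report on the same scale.

```python
from math import sqrt


def correlate_methods(old, new):
    """Return (Pearson r, slope, intercept) for new-method vs old-method results."""
    if len(old) != len(new) or len(old) < 3:
        raise ValueError("need at least three paired results")
    n = len(old)
    mean_old, mean_new = sum(old) / n, sum(new) / n
    sxx = sum((x - mean_old) ** 2 for x in old)
    syy = sum((y - mean_new) ** 2 for y in new)
    sxy = sum((x - mean_old) * (y - mean_new) for x, y in zip(old, new))
    if sxx == 0 or syy == 0:
        raise ValueError("results must vary across samples")
    slope = sxy / sxx
    return sxy / sqrt(sxx * syy), slope, mean_new - slope * mean_old


# Hypothetical relative potencies (%) for intact and progressively degraded samples.
old_results = [100.0, 80.0, 60.0, 40.0]
new_results = [98.0, 81.0, 59.0, 42.0]
r, slope, intercept = correlate_methods(old_results, new_results)
print(f"r={r:.3f} slope={slope:.3f} intercept={intercept:.2f}")
```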

Potency methods with a high coefficient of variation (%CV) for precision and intermediate precision can make performance comparisons challenging. Bridging studies for potency methods exhibiting high %CV must rely upon statistically relevant numbers of test samples and assay runs to assess true performance capabilities above procedural variability. Failure to show difference does not mean equivalence, so the statistical approach used in bridging potency assays should be described clearly and justified adequately.
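The distinction between "no difference shown" and "equivalence shown" can be illustrated with a confidence-interval form of the TOST procedure on paired results. This is a minimal sketch, not a recommended statistical plan: the function name, the margin, and the data are hypothetical, and the caller supplies the one-sided t critical value (for example from a t table or scipy.stats.t.ppf(0.95, df)).

```python
from math import sqrt
from statistics import mean, stdev


def equivalence_by_ci(old, new, margin, t_crit):
    """Return (lower, upper, equivalent) for the mean paired difference new - old.

    With t_crit set to the one-sided 95% t value for n - 1 degrees of freedom,
    the interval is a 90% two-sided CI, which corresponds to TOST at alpha 0.05.
    """
    if len(old) != len(new) or len(old) < 2:
        raise ValueError("need at least two paired results")
    diffs = [n - o for o, n in zip(old, new)]
    half_width = t_crit * stdev(diffs) / sqrt(len(diffs))
    lower, upper = mean(diffs) - half_width, mean(diffs) + half_width
    # Equivalence is claimed only if the whole interval sits inside the margin.
    return lower, upper, (-margin < lower and upper < margin)


# Hypothetical paired potency results (%) from six split samples; df = 5.
old_results = [98, 102, 101, 97, 100, 103]
new_results = [99, 101, 103, 98, 100, 104]
print(equivalence_by_ci(old_results, new_results, margin=5.0, t_crit=2.015))
```

An interval that includes zero but extends beyond the margin would show no significant difference and still fail to demonstrate equivalence.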

Of special note was the bridging of ligand binding and immunoassays. For ELISAs, binding characteristics of antigen(s) and antibody(ies) in existing and new methods must be compared to ensure similar (or better) capabilities of the latter for specificity of antigen–antibody reactions. Forum participants noted that bridging different host-cell protein (HCP) ELISAs is particularly complicated because regulators expect sponsors to demonstrate suitable specificity of different polyclonal antibodies using orthogonal methods to evaluate binding reactions.

If bridging data indicate that a new technology is a suitable and necessary replacement for one currently in use, how should that new method be phased in to existing release testing? Should there be a period of parallel testing using both methods? Which test should be used to release material? Do stability-indicating methods have critical timing considerations for ongoing and upcoming stability protocols? For bridging methods used only in QC release testing, sponsors are allowed and encouraged by health authorities to collect as much additional analytical data as possible using the new method in parallel with the existing certificate of analysis (CoA) method. The resulting set of dual real-time data will be valuable for justification of adjusting specification acceptance criteria if needed because the new method is more sensitive or specific to product quality attributes (PQAs).

For evaluation of product characteristics and/or method performance capabilities, a sponsor may decide to use tests that are under development “for information only” (FIO). FDA representatives indicated that such results may not have to be reported in an IND or BLA if they are run in addition to existing methods. Developmental methods may be discontinued without notifying regulatory authorities, even for clinical materials. However, sponsors should be able to justify why such a test was discontinued, including why it is not needed in the overall control strategy. If a new assay is determined to measure a critical quality attribute (CQA) not covered by the current control strategy, then that control strategy should be updated and the regulatory authorities notified.

Forum participants highlighted particular challenges in bridging methods that are included in stability protocols beyond those for methods used only at the time of lot release. It is better to complete existing protocols with current methods and bring new methods into new protocols. When such a change is required, it should be planned thoroughly; otherwise, the link between early and late time points could be lost.

New methods can be added to stability protocols to collect FIO data in parallel/dual testing alongside current methods. Because ongoing stability studies are already allocated to ICH storage conditions, however, it is important to verify adequate amounts of stability samples remaining for each protocol to run both methods through the end of the study (to prevent cannibalizing later time points). As with approved release methods, companies cannot delete methods from stability protocols without permission granted by regulatory authorities.
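The sample-sufficiency check described above is simple arithmetic, sketched here under assumed inputs. The function name, the time-point labels, and the vial counts are hypothetical, and the sketch assumes each test consumes whole vials drawn from that time point's own allocation.

```python
def short_time_points(vials_on_hand, vials_old, vials_new):
    """Return {time_point: missing_vials} for pulls that cannot support both methods."""
    needed = vials_old + vials_new
    return {
        time_point: needed - have
        for time_point, have in vials_on_hand.items()
        if have < needed
    }


# Hypothetical remaining pulls for one protocol and per-pull vial needs.
remaining = {"12M": 4, "18M": 3, "24M": 2}
print(short_time_points(remaining, vials_old=1, vials_new=2))
```

Any time point listed in the result would need additional retained material, or dual testing would have to stop before that pull.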

Paul Dillon noted that Pfizer does not like to perform dual testing on formal stability protocols. Instead, the company does considerable method-comparison work up front that generates adequate data to allow introduction of methods only in new protocols. He acknowledged that this is a time-consuming process that can take three to four years, but it is worth the investment for Pfizer to assure continuity of stability data to support its product shelf-life claims.

How can you track/trend the history of a product’s test results across the transition from old to new analytical technologies? Most attendees reported that it is difficult to monitor method performance when the only metrics tracked are product CoA results. To focus on analytical methods, they track/trend data from reference standards, QC-check samples, and other performance parameters that are independent of the product lots being tested. Doing so helps prevent confounding of normal product variations with normal variations in method performance.
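One simple way to trend reference-standard results independently of product lots is a Shewhart-style control chart. The sketch below is an assumption-laden illustration: the function names, the three-standard-deviation limits, and the data are hypothetical and do not come from the forum discussion.

```python
from statistics import mean, stdev


def control_limits(baseline, k=3.0):
    """Return (lower, upper) limits as mean +/- k SD of baseline reference results."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline results")
    center, spread = mean(baseline), stdev(baseline)
    return center - k * spread, center + k * spread


def out_of_limits(results, limits):
    """Return the indexes of results that fall outside the control limits."""
    lower, upper = limits
    return [i for i, value in enumerate(results) if not lower <= value <= upper]


# Hypothetical reference-standard results (%) before and after a method change.
baseline = [100.2, 99.8, 100.5, 99.5, 100.0, 100.3, 99.7]
after_change = [100.1, 99.9, 101.4]
print(out_of_limits(after_change, control_limits(baseline)))
```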

Many participants noted that it is particularly important to monitor the performance of a method after changes have been made to assure that it remains in an expected state of operational control. That is true even for methods that are qualified but not validated because performance results observed with a qualified method can help establish the predetermined performance acceptance criteria required for method validation (6). New methods that show inconsistent performance or problematic trends relative to their predecessors should be identified and remediated to ensure that test results are reliable.

Most attendees reported tracking QC method invalidity rates to be sure that analytical methods are suitably robust. Methods with a high rate of repeat tests because of invalids may not be in operational control. One attendee encouraged strong communications between R&D and QC groups to keep on top of new methods and ensure that they are running as expected. Lack of operational robustness in a QC method is one issue that can trigger changing a problematic method.
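Invalidity-rate tracking of this kind can be sketched in a few lines. The record layout, the function names, and the 10% flagging threshold are hypothetical choices for illustration; the forum did not specify a threshold.

```python
from collections import Counter


def invalid_rates(runs):
    """runs: iterable of (method_name, is_valid) pairs -> {method: fraction invalid}."""
    totals, invalids = Counter(), Counter()
    for method, is_valid in runs:
        totals[method] += 1
        if not is_valid:
            invalids[method] += 1
    return {method: invalids[method] / totals[method] for method in totals}


def flag_methods(rates, threshold=0.10):
    """Return methods whose invalid rate exceeds the (assumed) threshold."""
    return sorted(method for method, rate in rates.items() if rate > threshold)


# Hypothetical run log: 1 of 20 SEC-HPLC runs and 3 of 10 potency runs invalid.
run_log = (
    [("SEC-HPLC", True)] * 19 + [("SEC-HPLC", False)]
    + [("potency", True)] * 7 + [("potency", False)] * 3
)
print(flag_methods(invalid_rates(run_log)))
```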

Audience Questions

Is there a threshold for types of changes you can make to a method that would not trigger a reporting requirement or that would not require substantial data collection? This probably depends on the nature of a change. Does it change elements of the wet chemistry of a validated procedure or just its format? If the latter, how does the change affect accuracy, precision, linearity, and other features of the assay? A sponsor should design its data set to support the nature of a given change and return the method to a state of validation and control. It might be sufficient to track system suitability, but that depends on the type of procedural change.

One technique illustrated in the Genentech presentation involves comparing instructions for old and new assays at every step in their procedures, determining which elements change and which remain the same. That information is used to pinpoint exactly which procedural parameters would be most affected by a method change and identify which data would be critical to support a bridging assessment.
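A step-by-step comparison of this kind can be mimicked with a small script. The sketch below is not the presenter's tool: the function name, step names, and step descriptions are invented for illustration, and it only classifies each named step as unchanged, changed, removed, or added.

```python
def compare_procedures(old_steps, new_steps):
    """Classify each named step as unchanged, changed, removed, or added."""
    report = {}
    # Keep the old procedure's order, then append steps that exist only in the new one.
    ordered = list(old_steps) + [name for name in new_steps if name not in old_steps]
    for name in ordered:
        if name not in new_steps:
            report[name] = "removed"
        elif name not in old_steps:
            report[name] = "added"
        elif old_steps[name] == new_steps[name]:
            report[name] = "unchanged"
        else:
            report[name] = "changed"
    return report


# Hypothetical immunoassay procedures that differ mainly in detection.
old_sop = {"coat": "capture antibody, overnight", "detect": "colorimetric substrate", "stop": "acid stop solution"}
new_sop = {"coat": "capture antibody, overnight", "detect": "chemiluminescent substrate", "read": "luminometer"}
for step, status in compare_procedures(old_sop, new_sop).items():
    print(f"{step}: {status}")
```

Steps marked changed, removed, or added would then be the ones to examine for their likely effect on accuracy, precision, and the other parameters a bridging assessment needs to cover.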

Who should conduct method-bridging studies? Industry and regulatory participants both felt that it was possible to conduct method-bridging comparative testing studies either in QC or in R&D laboratories. One caveat is that R&D labs must be able to run the same method standard operating procedures (SOPs) — sample preparation, standards and controls, reagents and materials, instrumentation, procedural steps, system suitability and data analysis — as are used in QC for both the old and new methods. However, when R&D labs run methods (even those in written SOPs), they sometimes introduce “minor” adjustments to an assay that are not in its SOP, assuming those do not affect the final data. For method-bridging studies, it is important to eliminate that practice; resulting data may not provide a realistic sense of operational capabilities for a procedure in a QC laboratory.

Does the degree of written procedural detail on a method in product filings affect notification to regulatory agencies for changes in a procedure? For example, if a method summary is vague, does the sponsor have greater latitude in making changes? Regulators said no: reviewers need enough detail for adequate communication about major elements of a method. Minimal detail in dossier sections does not release a sponsor from good manufacturing practice (GMP) requirements to have written method procedures that are subjected to internal QA change control. A draft FDA guidance provides more information on the degree of detail expected in BLA/NDA method summaries (7).

Two additional questions were posed by the audience but postponed for future discussions.

Is there a possibility of establishing a preapproved comparability protocol for method changes in the same manner as for process changes? The general answer was negative, but the question deserves more consideration. It could be a point of emphasis in future discussions between industry and regulators at CMC Strategy Forums and Well-Characterized Biologic Products (WCBP) meetings.

Is there a possibility of garnering coordinated regulatory review for global methods changes? FDA representatives indicated that the agency is aware of this problem and welcomes suggestions on how to improve the situation. Global methods changes are very challenging. It may take years to complete a change across all regions, so changes for global products require careful consideration and planning. A plenary session at the 2014 WCBP meeting provided detailed examples of regulatory issues related to changing methods in global product submissions. The FDA also has discussed this with other regulatory agencies. Administrative issues would be a key concern (e.g., the impact cooperative efforts could have on agency deadlines). One agency representative suggested that the CMC Strategy Forums can play an important role in stimulating new ideas from industry on streamlining review processes. It may be that the CASSS global CMC Strategy Forums (in Europe, Japan, and Latin America) could help foster interagency communications as well as agency–industry dialog.

Expect and Plan on It

Forum attendees derived several main points to consider from these discussions. First, making changes in analytical methods is an expected part of normal life-cycle management of a control strategy, starting in product development and continuing through commercial production. FDA regulators encourage such changes. Performance capabilities of a new method must be compared with those of a previous method in a well-designed method-bridging (method comparability) study that includes a broad array of sample types that will challenge the new method for all its intended uses. Method performance parameters (including stability-indicating capabilities) of a proposed method should be demonstrated to be either the same as or better than those of the method being replaced.

It is not unusual to find differences in previously seen product characteristics with assay changes. If new species are detected with a new method, don’t ignore them; adopt a logical, strategic approach to assessing the impact on existing product specifications. This can include (but is not limited to)

Data from running the old and new methods in parallel for a period (especially in stability protocols)

Side-by-side data from both methods retesting multiple product lot samples retained throughout product development

Characterization information on newly detected species to establish their identity and assess their risk to product safety or efficacy.

Sponsors must provide information on analytical method changes to regulatory agencies based on current, applicable statutory requirements and regulatory guidance documents.

References

1 Rieder N, et al. The Roles of Bioactivity Assays in Lot Release and Stability Testing. BioProcess Int. 8(6) 2010: 33–42; http://c.ymcdn.com/sites/www.casss.org/resource/resmgr/imported/Bioassays%20January%202007%20FINAL%20Paper%20June%202010.pdf.

2 FDA and Industry Wrestle with Vagaries of Biotech Stability. The Gold Sheet 39(10) 2005; http://c.ymcdn.com/sites/www.casss.org/resource/resmgr/imported/Stability%20Gold%20Sheet%20Article.pdf.

3 Champion K, et al. Defining Your Product Profile and Maintaining Control Over It, Part 2: Challenges of Monitoring Host Cell Protein Impurities. BioProcess Int. 3(8) 2005: 52–57; http://c.ymcdn.com/sites/www.casss.org/resource/resmgr/imported/2005SEP_BioProcess_Part2.pdf.

4 2015 CMC Strategy Forum on Host-Cell Proteins. BioProcess Int. (manuscript in progress); speaker and summary slides online at http://casss.site-ym.com/?CMCJ1513.

5 Early Investment in Characterization Pays Dividends, CMC Forum Participants Agree. The Gold Sheet 40(6) 2006; http://c.ymcdn.com/sites/www.casss.org/resource/resmgr/imported/Reference%20Standards%20Gold%20Sheet%20Article.pdf.

6 Ritter N, et al. What Is Test Method Qualification? Proceedings of the WCBP CMC Strategy Forum, 24 July 2003. BioProcess Int. 2(8) 2004: http://c.ymcdn.com/sites/www.casss.org/resource/resmgr/imported/Test%20Method%20Qualifications%20Article.pdf.

7 CBER/CDER. Draft Guidance for Industry: Analytical Procedures and Methods Evaluation — Chemistry, Manufacturing, and Controls Documentation. US Food and Drug Administration: Rockville, MD, July 2000; www.fda.gov/ohrms/dockets/98fr/001424gl.pdf.

Corresponding author Nadine Ritter is president and principal analytical advisor of Global Biotech Experts, LLC; nadine.ritter@globalbiotech.com. Reb Russell is executive director of molecular and analytical development for Bristol-Myers Squibb Company. Timothy Schofield is a senior fellow in regulatory sciences and strategy, Kenneth Miller is a senior scientist, and Harry Yang is senior director and head of nonclinical biostatistics at MedImmune. Lokesh Bhattacharyya is chief of the Laboratory of Analytical Chemistry and Blood-Related Products at FDA’s Center for Biologics Evaluation and Research. Paul Dillon is a principal scientist in manufacturing sciences and technology at Pfizer Ireland Pharmaceuticals. Laurie Graham is an acting team leader at the FDA’s Center for Drug Evaluation and Research. Frank Maggio is a senior scientist at Amgen Inc. Dieter Schmalzing is a principal technical advisor, and Wei-Meng Zhao is a QC senior scientist at Genentech, a member of the Roche Group.

North American Program Committee for These Forums

Siddharth Advant (Kemwell Biopharma), Yves Aubin (Health Canada), John Bishop (FDA-CBER), Barry Cherney (Amgen Inc.), JR Dobbins (Eli Lilly and Company), Julia Edwards (Biogen Idec), Sarah Kennett (FDA-CDER), Joseph Kutza (MedImmune, a member of the AstraZeneca Group), Kimberly May (Merck & Co., Inc.), Anthony Mire-Sluis (Amgen Inc.), Stefanie Pluschkell (Pfizer, Inc.), Nadine Ritter (Global Biotech Experts, LLC), Reb Russell (Bristol-Myers Squibb Company), Dieter Schmalzing (Genentech, a member of the Roche Group), Timothy Schofield (MedImmune, a member of the AstraZeneca Group), Zahra Shahrokh (STC Biologics, Inc. and ZDev Consulting), Jeffrey Staecker (Genzyme Corporation, a Sanofi company), and Andrew Weiskopf (Biogen Idec)

DISCLAIMER: The content of this manuscript reflects the group discussions that occurred during the CMC Strategy Forum, including possible points of disagreement among attendees. Further, this document does not represent official FDA policy or opinions and should not be used in lieu of published FDA guidance documents, points-to-consider guidance documents, or direct discussions with the agency.

Nadine Ritter, Reb Russell, Timothy Schofield, Laurie Graham, Paul Dillon, Frank Maggio, Lokesh Bhattacharyya, Dieter Schmalzing, Wei-Meng Zhao, Kenneth Miller, and Harry Yang
