Developing Process Control Strategies for Continuous Bioprocesses

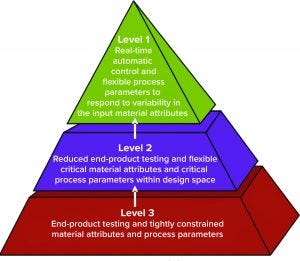

Figure 1: Control strategy implementation levels from traditional (level 3) to enhanced (level 1) approaches (1)

Process control enables biomanufacturers to ensure that operating parameters are within defined specifications. A control strategy should be established during early stages of process development while process and product performance are being defined using risk-based methods such as quality by design (QbD) and process analytical technologies (PATs). Confirming process control as an essential part of product development creates greater process knowledge and understanding and provides the first steps toward process optimization. By understanding how process performance relates to product quality, biomanufacturers can reduce time and cost burdens. But implementing control strategies for continuous and integrated processes is not as straightforward as for traditional batch and fed-batch systems, and advanced technologies will be needed to ensure control of an entire continuous process train.

At the 2019 BWB/BPI conference in Boston, MA, Christoph Herwig (founder and scientific advisor of Exputec as well as professor of bioprocess engineering at the Institute of Chemical, Environmental, and Biological Engineering at TU Wien) outlined three levels of control strategy implementation (Figure 1). Each level is defined by the degree of process-parameter flexibility in response to different manufacturing changes or variabilities. Herwig believes that most current bioprocesses are at level three: “Currently, we have low knowledge, especially for continuous bioprocesses, which mainly are run only by volumetric and recipe-controlled systems. Biomanufacturers are switching according to volume and traditional conditions without real control on many critical quality attributes (CQAs) and no real analytics or PAT tools involved so far.”

Such capabilities can be achieved by implementing elements in the second level: an enhanced approach to reducing end-product testing. “The second level is the digital-twin approach, in which we finally have more process knowledge and understanding, which is captured in a digital twin. And we can control based on a digital twin” (see the “Digital Twins” box).

Ultimately the industry’s goal is to reach level one, in which biomanufacturers can make real-time decisions and in which process parameters are flexible to respond to variabilities in input materials.

Digital Twins

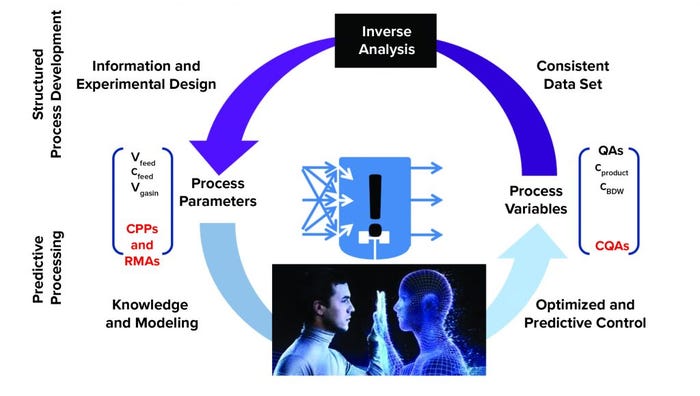

Biomanufacturers cannot measure everything responsible for controlling quality attributes, and many process parameters cannot be controlled simultaneously. Digital-twin technology uses historical process data and current process conditions to estimate measurements or process variables that are difficult to measure, thus making it possible to control elements that cannot be measured directly. With a digital twin, biomanufacturers need fewer measurements and gain more degrees of freedom in process control. “You are not dependent on only a limited number of parameters. You can control any entity,” says Christoph Herwig (Exputec/TU Wien) (Figure 2).

For example, most people now can control only “simple” parameters such as feed rate, temperature, pH, and glucose concentration in a bioreactor. However, quality attributes might be linked to physiological aspects of cells. “You have to control growth rate and substrate uptake rate, but those parameters cannot be measured. A digital twin makes parameters that traditionally cannot be measured available for control because the digital twin calculates those parameters.”

Although digital twins can be a valuable tool for intensified processes, such technologies are needed much more for continuous processes because they are designed without the hold points of batch systems. Herwig explains that a batch process allows operators to finish a batch, measure the quality, and then continue with the next process step using control measurements from the previous step with in-process control (IPC) or even quality control (QC) analysis. However, continuous processes have no hold points, leaving no time to measure for QC. “A digital twin allows you to have those measuring elements in a timely manner. This is what process analytical technology (PAT) is about. According to the guidelines, PAT is a system of controlling quality attributes with timely control measurements. You finally can control a process in real time. For that, you need a real-time environment with digital systems so that the digital twin can run within a set time. Then, you feed the model with certain easily measurable variables, and the model of the digital twin derives the entities you can control in real time.”
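To make the idea of calculating unmeasurable physiological rates concrete, the following minimal sketch estimates specific growth rate and specific substrate uptake rate from two consecutive samples. It is an illustration only, not the model Herwig describes: it assumes a well-mixed continuous culture without cell retention (a chemostat), on-line estimates of biomass and substrate concentration, and the standard mass balances mu = D + (1/X)(dX/dt) and q_s = (D(S_in - S) - dS/dt)/X. All names (`Sample`, `estimate_specific_rates`) and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One set of on-line readings (hypothetical structure)."""
    time_h: float
    biomass_g_per_l: float
    substrate_g_per_l: float


def estimate_specific_rates(prev, curr, dilution_rate_per_h, feed_substrate_g_per_l):
    """Return (mu, q_s) in 1/h and g substrate per g biomass per h.

    Assumes a chemostat without cell retention; derivatives are
    approximated by finite differences between the two samples.
    """
    dt = curr.time_h - prev.time_h
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")

    # Midpoint concentrations over the interval
    x_mid = 0.5 * (prev.biomass_g_per_l + curr.biomass_g_per_l)
    s_mid = 0.5 * (prev.substrate_g_per_l + curr.substrate_g_per_l)
    if x_mid <= 0:
        raise ValueError("biomass must be positive")

    dx_dt = (curr.biomass_g_per_l - prev.biomass_g_per_l) / dt
    ds_dt = (curr.substrate_g_per_l - prev.substrate_g_per_l) / dt

    # Biomass balance: dX/dt = (mu - D) * X
    mu = dilution_rate_per_h + dx_dt / x_mid
    # Substrate balance: dS/dt = D * (S_in - S) - q_s * X
    q_s = (dilution_rate_per_h * (feed_substrate_g_per_l - s_mid) - ds_dt) / x_mid
    return mu, q_s


# Illustrative values only
mu, q_s = estimate_specific_rates(
    Sample(0.0, 4.0, 1.0), Sample(2.0, 6.0, 0.5),
    dilution_rate_per_h=0.05, feed_substrate_g_per_l=10.0,
)
print(f"mu = {mu:.3f} 1/h, q_s = {q_s:.4f} g/g/h")
```

A perfusion system with cell retention would need a different biomass balance, and a real digital twin would typically combine such balances with kinetic models and filtering of noisy signals.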

Understanding Continuous Processing

Continuous-mode operations have higher volumetric productivity than batch processes (i.e., more product from a bioreactor of a given volume over a specific time). That is especially useful for monoclonal antibodies (MAbs) because the market for such products is a large part of the current biopharmaceutical industry. Full continuous mode also can be performed with equipment units that have smaller footprints, so facilities can occupy less floor space than traditional plants. Perfusion-mode production systems also can be used to increase productivity within smaller footprints. But as Peter Satzer (senior scientist at the Austrian Center of Industrial Biotechnology, ACIB) cautions, “[Perfusion systems] do not always reduce costs. Switching upstream from fed-batch to perfusion uses much more media because resulting titers usually are lower.” A hybrid process in which a fed-batch upstream process is connected to a continuous downstream process, for example, can be a way of getting the best of both process modes.

For some molecules that are unstable during fermentation, full continuous manufacturing allows operators to retrieve product from the reactor every day. For batch or fed-batch processes, a product would stay in a reactor much longer, potentially creating higher product variance that must be dealt with downstream.

Continuous systems generate a great deal of process data, which must be analyzed (Figure 2) (3). “The complexity of how quickly you must analyze data is greater with continuous manufacturing,” says Lisa Graham (vice president of analytics engineering at Seeq). “You don’t just hold the batch, wait four hours, and then execute a control strategy. You don’t have a lot of time to check your continuous process because it’s moving rapidly from one unit operation to the next.”

Continuous manufacturing consists of interrelated unit operations. For a batch process a process control strategy could be designed around a single unit operation. But for continuous systems, the control of one unit operation affects what happens in the next. So a greater level of understanding is needed about the interactions of different steps in a continuous process.

“With continuous processing, you have a lot more things that you might be able to change,” says Satzer. “For example, if you’re having a continuous perfusion culture, you can change something during perfusion. And if the product performance you have in terms of either productivity or product quality doesn’t meet your standards today, you might be able to get the culture back on track the next day.” By contrast, fed-batch processes typically are defined by and compared with a “golden batch.” Batches typically are run the same way every day.

When the biomanufacturing industry first started developing continuous processes, one of the initial questions was how to define a batch. Most experts agreed that a batch could be defined over a specific time or by any other method according to the process itself. However, Satzer points out that the biopharmaceutical industry, unlike small-molecule and other industries, still is working to define where to draw the line between batches from a process production standpoint. “I think we can learn a lot in these terms from the small-molecules industry, which already has accomplished continuous processing. For biologics, we might be missing more process understanding by comparison. Batch definition also could be a cost factor. If you’re defining and testing your batches each week, then something goes wrong, you have to wait a week for testing, and you have to throw the production of a whole week away. If you define a batch that’s within a shorter time frame, then you just don’t lose that much material in case of a deviation.” However, the bioindustry still lacks understanding of the complete process train, especially how a deviation in one unit operation translates through all subsequent unit operations.
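Satzer's cost argument can be put into simple arithmetic. The sketch below assumes that a single deviation invalidates the entire batch window in which it occurs and that output is constant. The production rate of 0.5 kg/day is a made-up number for illustration, and `material_at_risk_kg` is a hypothetical helper.

```python
def material_at_risk_kg(output_kg_per_day, batch_window_days):
    """Product lost if one deviation invalidates a whole batch window.

    Simplifying assumptions: constant output and all-or-nothing batch rejection.
    """
    if output_kg_per_day < 0 or batch_window_days <= 0:
        raise ValueError("output must be non-negative and window positive")
    return output_kg_per_day * batch_window_days


# Hypothetical production rate, compared across two batch definitions
for window_days in (7, 1):
    loss = material_at_risk_kg(0.5, window_days)
    print(f"{window_days}-day batch window: {loss} kg at risk per deviation")
```

Under these assumptions the material at risk scales linearly with the batch window, so a daily batch definition exposes one-seventh of the product that a weekly definition does. The sketch ignores the added testing cost of releasing more, smaller batches.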

Satzer says the current industry is at the stage of setting up individual unit operations using nonmechanistic modeling, empirical approaches, and statistical modeling rather than the more accurate mechanistic models used in other industries. That lack of mechanistic understanding limits what can be achieved in terms of process control for continuous manufacturing. “You want to establish a good setup for your process design space, and you have to keep within that space because your models cannot be extrapolated if they are purely statistical.”
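One way to respect that limit in software is to refuse predictions whenever an input falls outside the range used to calibrate a statistical model. The sketch below is a minimal illustration under that assumption. It treats the design space as a simple per-variable box, which is a simplification because real design spaces can be multivariate and non-rectangular. The names `Range`, `outside_design_space`, and `guarded_predict` and the ranges shown are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Range:
    """Calibrated range of one model input (hypothetical)."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


def outside_design_space(inputs: Dict[str, float],
                         calibrated: Dict[str, Range]) -> List[str]:
    """Return the sorted names of inputs that are missing or out of range."""
    violations = []
    for name, allowed in calibrated.items():
        if name not in inputs or not allowed.contains(inputs[name]):
            violations.append(name)
    return sorted(violations)


def guarded_predict(model: Callable[[Dict[str, float]], float],
                    inputs: Dict[str, float],
                    calibrated: Dict[str, Range]) -> float:
    """Call the model only when every input lies inside its calibrated range."""
    violations = outside_design_space(inputs, calibrated)
    if violations:
        raise ValueError(f"extrapolation refused for: {', '.join(violations)}")
    return model(inputs)


# Illustrative ranges only, not taken from any real process
calibrated = {"pH": Range(6.8, 7.2), "temp_c": Range(35.0, 37.5)}
print(outside_design_space({"pH": 7.0, "temp_c": 38.0}, calibrated))
```

A mechanistic model would not need such a hard guard to the same degree, which is the contrast Satzer draws.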

Figure 3: Digital-twin approach enabling predictive processing and modeling from acquired process knowledge and understanding (CPP = critical process parameter, RMA = raw material attribute, CQA = critical quality attribute; used with permission from C. Herwig) (2)

Tools and Technologies Needed

Continuous processes require a much higher level of control than batch processes. So a control system for a continuous process needs to correct changes from steady-state performance quickly and reliably. Parameters for batch processes often are measured off-line, but the same parameters for a continuous process must be measured on-line to provide timely information.
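As a generic illustration of a control loop that returns a process to its steady-state setpoint, the sketch below implements a discrete proportional-integral (PI) controller and tests it against a simulated first-order process. PI control is a common textbook technique and is not attributed to anyone quoted here. The process model, tuning values, and temperature-like numbers are all assumptions chosen only to make the example run.

```python
class PIController:
    """Discrete PI controller with a clamped output and simple anti-windup."""

    def __init__(self, kp, ki, setpoint, out_min, out_max):
        self.kp = kp
        self.ki = ki
        self.setpoint = setpoint
        self.out_min = out_min
        self.out_max = out_max
        self._integral = 0.0

    def update(self, measurement, dt):
        error = self.setpoint - measurement
        candidate = self._integral + error * dt
        raw = self.kp * error + self.ki * candidate
        output = min(max(raw, self.out_min), self.out_max)
        # Anti-windup: keep integrating only while the actuator is not saturated
        if output == raw:
            self._integral = candidate
        return output


def simulate(controller, y0, steps, dt, gain=0.1, tau=10.0, baseline=30.0):
    """Euler simulation of a hypothetical first-order process.

    dy/dt = (gain * u - (y - baseline)) / tau, with u the controller output.
    """
    y = y0
    for _ in range(steps):
        u = controller.update(y, dt)
        y += dt * (gain * u + baseline - y) / tau
    return y


# Start one unit below the setpoint and let the loop recover (values illustrative)
controller = PIController(kp=20.0, ki=2.0, setpoint=37.0, out_min=0.0, out_max=100.0)
final_value = simulate(controller, y0=36.0, steps=1500, dt=0.1)
print(f"value after 150 time units: {final_value:.3f}")
```

Such a loop is only useful if the measurement arrives on-line, fast relative to the process dynamics, which is why off-line assays cannot close it.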

The ideal control strategy also provides a measure of robustness. “You don’t want to be operating in a way that places you on the edge of specification limits,” says Graham. “According to FDA guidance, a control strategy can include, but is not limited to, controls on material attributes, controls implicit in the design of the manufacturing process, in-process controls, and controls on drug substances. Process control should be done in close concert with a full understanding of each unit operation, both individually as well as in how they are connected in a continuous process. The closer the control variables are to the key component you’re trying to control, the better.”
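One common way to quantify how far a process runs from the edge of its specification limits is the process capability index Cpk, the distance from the mean to the nearer limit divided by three standard deviations. Graham does not name this metric, so it appears here only as an illustrative assumption. The pH-like values and limits are invented for the example.

```python
import statistics


def cpk(values, lower_spec, upper_spec):
    """Process capability index: min(USL - mean, mean - LSL) / (3 * sigma).

    Uses the sample standard deviation and assumes roughly normal data.
    """
    if lower_spec >= upper_spec:
        raise ValueError("lower_spec must be below upper_spec")
    if len(values) < 2:
        raise ValueError("need at least two values")
    mean = statistics.mean(values)
    sigma = statistics.stdev(values)
    if sigma == 0:
        raise ValueError("no variation in data; Cpk is undefined")
    return min(upper_spec - mean, mean - lower_spec) / (3.0 * sigma)


# Hypothetical readings with specification limits of 6.8 to 7.2
centered = [7.0, 7.1, 6.9, 7.0, 7.0]
near_limit = [v + 0.15 for v in centered]
print(f"centered:   Cpk = {cpk(centered, 6.8, 7.2):.2f}")
print(f"near limit: Cpk = {cpk(near_limit, 6.8, 7.2):.2f}")
```

With identical variability, the data set that sits closer to the upper limit yields a lower Cpk, which reflects the smaller safety margin Graham warns about.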

Measuring a single input variable is a much more robust approach to assessing quality than controlling and correlating a combination of variables. “You want less reliance on correlation and more on direct measurements when possible. The goal is to go a step further and rely on analytics to provide causality. Understanding what’s causing x or y is important for a robust control strategy,” she notes.

The level of process control to be achieved also depends on the sophistication of the tools and technologies implemented. Advanced processes now include a high degree of digitalization and automation while also leveraging PAT and QbD.

“If you want to have complete process control for an entire process train, then you need a digital representation of that process,” notes Satzer. For example, a digital twin can be developed to gather real-time data and establish real-time model-driven control. If built before a process is set up, it also can be used for modeling. “We need a digital-twin–type of technology of the whole process for good process control because in a continuous process, all unit operations are connected all the time,” says Satzer. “If we understand our process well enough, and if we have some process analytical tools, we might be able to skip some of the analytics that we currently do for batch release or for understanding our product quality.”

Other technologies and methods still need to be developed further for complete process control. Although some CQAs can be measured on- or at-line, others require time for assessment. As Satzer observes, “Some assays testing viral and microbial contaminants, for example, still need time for growing the microbial control or for checking for levels of biocontaminants. For that, we need new, faster technologies — at least for completely continuous manufacturing and real-time release. Those CQAs currently are assessed with tests that can take up to 20 days, depending on the product. That’s of no use if you want to run that process continuously and control it in real time. A process cannot be controlled if you’re getting data 20 days late.”

Another approach would be to develop a process model that can predict the presence or absence of such contaminants using other measured values. Currently, biomanufacturers are working on methods to infer some CQA values that typically would take a long time to measure from other data gathered during a process. “That’s also the idea behind the QbD approach. You’re feeding all your process data and the data from on-line analytics into a model. And that model predicts those attributes that you cannot measure directly using on-line and at-line tools.”

Suitable software platforms also can facilitate process control. As Satzer points out, “Currently, we are buying our unit operations one after the other. But they need to be connected to a common framework software-wise to be able to collect all data from the whole process and implement a process-wide control strategy.”

Graham agrees but adds that such measurement technologies also should be coupled with robust process-historian systems. She points out that the bioindustry needs tools that enable robust saving and capturing of process data, along with analytics that enable access to measurements in near real time from those data historians. It’s important to implement a “robust knowledge-capture strategy to help streamline technical transfer of the process understanding from R&D to production.”

But the most important part of process control is “tools that put those data right at the fingertips of the engineers and scientists,” says Graham. “Engineers in the past quite often had to find someone to give them access to stored data of interest, and then they used Excel to cut and paste those data to a spreadsheet and wrangle with it. By the time they came up with an answer, two more weeks may have gone by. And now their choice is to go cut and paste new data into a spreadsheet or to accept this as too time-intensive and take no action, missing out on opportunities for improvement. So when developing a control strategy, it’s extremely important that engineers be able to leverage their subject matter expertise easily in concert with the data.” Graham’s company couples data and the source of those data with engineers who know the process. “These engineers analyze the data to create, implement, and improve control strategies.”

From the BPI Archives

Goby JD, et al. Control of Protein A Column Loading During Continuous Antibody Production: A Technology Overview of Real-Time Titer Measurement Methods. September 2019.

Brower M, et al. Continuous Biomanufacturing: A New Approach to Process Scale. June 2019.

Montgomery SA, et al. Making Downstream Processing Continuous and Robust: A Virtual Roundtable. June 2019.

Cooney B, Jones SD, Levine HL. Quality By Design for Monoclonal Antibodies: Establishing the Foundations for Process Development, Design Space, and Process Control Strategies. June 2018.

Rajamanickam V, Herwig C, Spadiut O. Data Science, Modeling, and Advanced PAT Tools Enable Continuous Culture. April 2018.

Whitford W, et al. The 2017 World Biological Forum: Successes and Future Trends in Continuous Biomanufacturing. October 2017.

Holzer M. Is Continuous Downstream Processing Becoming a Reality? May 2017.

Scott C. Continuous Processes: Disposables Enable the Integration of Upstream and Downstream Processing. May 2017.

Munk M. The Industry’s Hesitation to Adopt Continuous Bioprocessing: Recommendations for Deciding What, Where, and When to Implement. April 2017.

Schmidt SR. Drivers, Opportunities, and Limits of Continuous Processing. March 2017.

Sherman M. Continuous Cell Culture Operation at 2,000-L Scale. November 2016.

Monge M. Deciding on an Integrated Continuous Processing Approach: A Conference Report. June 2016.

DePalma A. Special Report on Continuous Bioprocessing: Upstream, Downstream, Ready for Prime Time? May 2016.

Mothes B, et al. Accelerated, Seamless Antibody Purification: Process Intensification with Continuous Disposable Technology. May 2016.

Learning from Others

At the 2019 BWB/BPI conference in Boston, Graham’s presentation focused on lessons that the bioprocess industry can learn from other industries, including quality throughput, process robustness, knowledge management, and real-time process control. She says her purpose was to show that process data can be managed where they are being obtained, without moving that information to separate spreadsheets. “The food and beverage industries, for example, conduct real-time monitoring and control to maintain quality of their products. I presented a case of how the beverage industry controls the quality of its products in real time, using PATs and process-control strategies to handle significant volumes and ensure food safety.”

She says that people sometimes challenge her when she points out that other industries conduct real-time control, arguing that the biopharmaceutical industry is more complex. So she chooses her examples carefully. “Until a few years ago, it was common to hear people in biomanufacturing say, ‘The process is the product’ and to not change anything because we never would know how those changes would affect the product. We didn’t have a direct measure for near real-time characterization, quality-control testing, and disposition of biologics protein attributes, so the industry had to infer product quality from many other measurements and process variables.”

A breakthrough came when Amgen released its real-time mass spectrometry measurement of protein-quality metrics. Amgen since has shared more about that technology to get the biopharmaceutical industry looking at real-time mass spectrometry as a new approach. “When I think about where the bottlenecks have been, I realized that we’ve had some technology breakthroughs like this in the past few years that have opened the door to help move the industry toward real-time control.”

References

1 Yu LX, et al. Advancing Product Quality: A Summary of the Second FDA/PQRI Conference. AAPS J. 2016; doi:10.1208/s12248-016-9874-5.

2 Sagmeister P, et al. Bacterial Suspension Cultures. Meyer HP, Schmidhalter D, Eds. Wiley: Hoboken, NJ, 2014.

3 Graham L, Wareham L. Leveraging Data Analytics to Support Merck’s Journey in Continuous Manufacturing. OSIsoft PI World Conference, San Francisco, CA, 2019; https://cdn.osisoft.com/osi/presentations/2019-uc-san-francisco/US19NA-D2LS06-Merck-Wareham-Leveraging-Data-Analytics-to-Support-Mercks-Journey-in-Continuous-Manufacturing.pdf.

Maribel Rios is managing editor at BioProcess International, part of Informa Connect; [email protected].
