Automated Closed-Loop Solution for Bioreactors and Fermentors

May 1, 2008

Today, there is much discussion of the promise of improved insight into bioprocesses. Look to the pages of industry publications such as this one, and you'll see that industry leaders in process measurement and control have begun to discuss openly the potential for simulating and modeling bioprocesses.

“Important opportunities such as the application of mass spectrometers, dissolved carbon dioxide probes, and inferential measurements of metabolic processes have come to fruition today opening the door to more advanced process analysis and control technologies,” says Greg McMillan with Emerson Process Management.

However, speak to such professionals for any length of time, and you’ll learn that unlike processes associated with petroleum manufacturing, for example, which have been modeled for nearly 40 years, biotechnology involves living processes, which are much more challenging to predict. But if the industry is going to improve its process reliability and product quality while reducing operating costs, such prediction is necessary. Only by doing so can we take bioprocessing to the next level.

The PAT Initiative

In recognition of the needs of the biotech industry and the role technology can play in meeting those needs, the FDA put forth the process analytical technology (PAT) initiative. The petroleum industry adopted process analytical technologies more than 20 years ago, and that adoption was accompanied by operational excellence (OpX) programs, as is now the case in biotechnology. Whereas PAT provides a framework for implementing new technologies for process improvement, OpX makes the business case for such implementation.

To better understand the role that PAT will play in the future of bioprocessing, consider how it is already shaping the products that vendors offer. "Simulation and modeling tools are at the heart of Emerson's efforts to incorporate the PAT initiative into its product offerings," says Terry Blevins, also with Emerson Process Management.

A review of technologies most often discussed in the framework of PAT and OpX finds a common thread: process data. That is, the real-time or near-real-time collection of process measurements from which immediate analyses can be made and the necessary actions taken to ensure process reliability and product quality. However, only in the past five years have the required process controllers, process analyzers, and analytical software tools begun to appear in the process development laboratories and manufacturing suites of the biopharmaceutical industry. Even so, one strategic component was still missing if process data were to be available in real or near-real time: an automated process sampling system.

Process Analyzers Are Key

Groton Biosystems recognized that if the industry was going to achieve its next level of growth, it would need to improve process reliability and product quality while reducing operating costs. Our vision was confirmed as the industry began to invest heavily in process analyzers capable of measuring not only the traditional pH, dissolved oxygen, and temperature, but also glucose, cell viability, osmolality, and so on. These analyzers proved critical to any successful implementation of process simulation or modeling because they provide the requisite information from which analytical software tools can extrapolate data and predict process behavior.

Figure 1: The PAT initiative seeks to take bioprocessing beyond the days of manual sampling (above) and time-intensive laboratory testing.

“With the predictive control tools that Emerson is using, we believe we will be able to do inline corrections, looking at the model and deducing the direction that the process is taking,” says Scott Broadley of Broadley-James.

Bridging the Gap

Before process analyzers, predictive models relied heavily on mathematics rather than actual process data to predict behavior. That approach worked well for nonliving processes such as petroleum refining but failed miserably when applied to living bioprocesses. Even as process analyzers were recognized for the critical role they would play, they proved to be highly proprietary, and as such they have acted as islands of process data to which a bridge needed to be built. That bridge is the Groton Biosystems Automated Reactor Sampling (ARS) system. Before its introduction, there were basically two sampling options:

Manual sampling, which was labor intensive, could not respond to rapid process changes, introduced data collection errors, and was prone to contamination.

Proprietary automated sampling systems, which interfaced with just one instrument, could be used only for upstream applications, and were also prone to contamination.

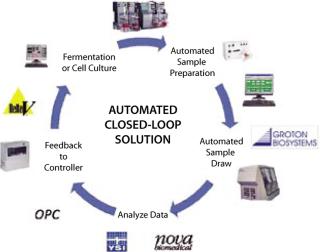

Our introduction of the ARS system redefined the expectations and capabilities of automated sampling. It is an "open" system that can be interfaced with an array of process instruments, including nutrient monitors, HPLC systems, cell counters, and osmometers. Because it can interface with HPLC systems, the ARS system can be used in both upstream and downstream applications. The system prepares its own samples, which greatly reduces the risk of process contamination. It transfers data electronically, which eliminates errors that can occur in data transcription. And it can be interfaced directly with a process controller. Additionally, the ARS system can be networked with up to four process analyzers and actively monitor up to eight reactors at a time.
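The capacity limits above (up to eight reactors, up to four analyzers) suggest how a sampler might sequence its work. The sketch below is a hypothetical round-robin schedule, not Groton's actual logic: each cycle draws a sample from the next reactor and routes it to every connected analyzer. The function name, the round-robin policy, and the reactor and analyzer names are assumptions made for illustration.

```python
from collections.abc import Iterator
from itertools import cycle, islice

MAX_REACTORS = 8   # capacity figures quoted in the text
MAX_ANALYZERS = 4


def sampling_schedule(
    reactors: list[str], analyzers: list[str]
) -> Iterator[tuple[str, tuple[str, ...]]]:
    """Yield (reactor, analyzers) pairs in round-robin order, indefinitely.

    Each step stands for one hypothetical sampling cycle: draw a sample
    from the next reactor and send an aliquot to every analyzer.
    """
    # Validate eagerly so a bad configuration fails at setup, not mid-run.
    if not 1 <= len(reactors) <= MAX_REACTORS:
        raise ValueError(f"expected 1-{MAX_REACTORS} reactors, got {len(reactors)}")
    if not 1 <= len(analyzers) <= MAX_ANALYZERS:
        raise ValueError(f"expected 1-{MAX_ANALYZERS} analyzers, got {len(analyzers)}")
    targets = tuple(analyzers)
    return ((reactor, targets) for reactor in cycle(reactors))


# First four cycles for three reactors and two analyzers (names are made up).
first_cycles = list(
    islice(sampling_schedule(["R1", "R2", "R3"], ["nutrient_monitor", "cell_counter"]), 4)
)
```

Round-robin is only the simplest fair policy; a real system might instead weight reactors by how quickly their processes are changing.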

Figure 2: Filtration options

However, like any bridge, the ARS system must carry data from its point of origin to the place where it can be used. To ensure this, Groton embraced the role of the process controller, whether a distributed control system (DCS) or a programmable logic controller (PLC), as well as its associated software for process analysis and control. Thus, an ARS system can communicate process data in two distinct formats:

Analog (a method whereby collected process data can be transmitted as standard voltage or current signals either directly to a process controller or by means of an I/O gateway, which collects analog signals and retransmits them to the process controller in a fieldbus protocol such as DeviceNet or Profibus DP)

OLE for Process Control, or OPC (a method whereby collected process data are communicated directly to a process controller over the OPC protocol, which allows a great deal of data to be carried over very little wiring at greatly reduced cost).
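For the analog option, a common "standard current signal" is the 4-20 mA loop, in which a measurement range maps linearly onto 4 to 20 mA. The sketch below assumes a 4-20 mA loop and a hypothetical glucose channel ranged 0-10 g/L; the function names and the 3.6 mA broken-loop threshold are illustrative assumptions, not ARS or controller settings. A 0-10 V voltage signal would scale the same way with different endpoints.

```python
LOOP_MIN_MA = 4.0   # live zero of a 4-20 mA loop
LOOP_MAX_MA = 20.0
SPAN_MA = LOOP_MAX_MA - LOOP_MIN_MA


def value_to_current(value: float, lo: float, hi: float) -> float:
    """Sampler side: map a result in [lo, hi] onto 4-20 mA, clamping at the ends."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    fraction = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return LOOP_MIN_MA + fraction * SPAN_MA


def current_to_value(ma: float, lo: float, hi: float) -> float:
    """Controller side: map a received 4-20 mA signal back to engineering units."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    if ma < 3.6:  # hypothetical threshold: far below live zero suggests a broken loop
        raise ValueError(f"{ma} mA is below live zero; check wiring")
    fraction = min(max((ma - LOOP_MIN_MA) / SPAN_MA, 0.0), 1.0)
    return lo + fraction * (hi - lo)


# Hypothetical glucose channel ranged 0-10 g/L.
signal = value_to_current(2.5, lo=0.0, hi=10.0)      # 8.0 mA
received = current_to_value(signal, lo=0.0, hi=10.0)  # 2.5 g/L
```

Because the live zero sits at 4 mA rather than 0 mA, a controller can tell a genuine zero reading from a cut wire. Note also that each analog channel carries exactly one value, which is part of why the wiring burden grows with the number of measurements.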

Figure 3

Of those two formats, OPC has proven itself to be the technological leader. Built on Microsoft's OLE/COM technology and maintained by the OPC Foundation, OPC was developed specifically to enable communication between process devices. It couples disparate systems and, in doing so, introduced the ability to communicate not just process data but also process commands such as sample, clean, and calibrate. To date there are more than 300 OPC interfaces commercially available and more than 100,000 OPC installations worldwide in a host of industries, including life sciences, petroleum refining, and specialty chemicals.
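The key difference from the analog option is that a single OPC-style connection carries many named values in both directions, including commands. The toy model below illustrates only that idea: it is a hypothetical stand-in, not the OPC API or any real OPC library, and the class, method, and tag names (such as `R1.Glucose`) are invented for this sketch.

```python
from dataclasses import dataclass, field
from enum import Enum


class Command(Enum):
    """Commands named in the text; the string values are hypothetical."""
    SAMPLE = "sample"
    CLEAN = "clean"
    CALIBRATE = "calibrate"


@dataclass
class ToyTagServer:
    """Hypothetical stand-in for an OPC server's address space, not a real OPC API."""
    values: dict[str, float] = field(default_factory=dict)
    pending: list[tuple[str, Command]] = field(default_factory=list)

    def publish(self, tag: str, value: float) -> None:
        """Sampler side: expose the latest analyzer result under a named tag."""
        self.values[tag] = value

    def read_many(self, tags: list[str]) -> dict[str, float]:
        """Controller side: fetch several values in one request on one connection."""
        return {tag: self.values[tag] for tag in tags}

    def write_command(self, unit: str, command: Command) -> None:
        """Controller side: queue a command for the sampler to act on."""
        self.pending.append((unit, command))


server = ToyTagServer()
server.publish("R1.Glucose", 4.2)        # tag names are made up
server.publish("R1.Osmolality", 310.0)
snapshot = server.read_many(["R1.Glucose", "R1.Osmolality"])
server.write_command("R1", Command.SAMPLE)
```

Adding a measurement here means adding a tag rather than running another wire, and the same channel that delivers results can also tell the sampler to sample, clean, or calibrate.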

As the biopharmaceutical industry wrestles with challenges such as scale-up, contract manufacturing, extended-length runs, demands for higher yields, rising costs, and increased regulation, the role of technologies such as process modeling and simulation should continue to grow. By addressing the technological hurdles described above, the ARS system from Groton Biosystems can already deliver perhaps the most critical information of all: insight into your process. With that insight, you can begin to improve process reliability and product quality while reducing operating costs.
