From Chips to CHO Cells: IT Advances in Upstream Bioprocessing


Advances in our capabilities for data acquisition, storage, and manipulation are providing the biopharmaceutical industry with an increased understanding of what must be controlled in bioproduction as well as the ability to control it. Developments in hardware, processing algorithms, and software are changing the landscape of bioprocess administration.

Increased power for information gathering and processing began with the remarkable increases in microprocessor speed, pipelining, and parallelism over the past couple of decades (1). It continues with advances in data-handling and manipulation routines as well as device interfaces (2). That foundation supports powerful new software that implements sophisticated mathematical, statistical, and logical algorithms. For example, GE Healthcare’s UNICORN system control software provides built-in knowledge for planning and controlling runs, and it analyzes results from a number of bioproduction operations. Such advanced software is suitable for use in FDA-regulated environments and provides traceability and convenient backup functionality. Together, these developments are enabling much greater bioprocess understanding, development, and control.

Process Understanding

With the advent of the quality by design (QbD) paradigm, data management and process modeling, monitoring, and control have increased in importance throughout the biopharmaceutical industry (see the “BioProcess Design and Control” box below).

BioProcess Design and Control

For a more detailed discussion of these new concepts in bioprocess design and control, see BioProcess International’s March 2008 supplement. Articles by Christian Julien (currently with Meissner Filtration Systems) and BPI editorial advisor William Whitford (currently with GE Healthcare Life Sciences) discuss the new era in bioprocess design and development, designing for process robustness, and operational excellence through quality by design (QbD). Other contributors discuss process analytical technology (PAT) tools for accelerated process development and improvement as well as bioreactor/fermentor automation.

Systems-revealing procedures such as high-content analysis (HCA) and high-content screening (HCS) use data-generating instrumentation such as digital microscopy and flow cytometry. Providing the higher level of analytics that regulatory authorities expect requires fast memory and ample processing power to manage the large amounts of data generated. Regulatory expectations also require advanced software to manipulate those data and to apply, for example, current understanding of cell biology that makes the many reported values meaningful. Analytical software used to be written by researchers themselves, but over time it came to be provided by third-party vendors. HCA equipment providers now supply their own software packages, robust enough to provide useful analysis across a wide array of applications.

For example, GE Healthcare’s IN Cell Investigator software offers a comprehensive solution for automated high-content analysis of live and fixed cell assays. It can measure such processes as the cell cycle, receptor internalization, and micronucleus formation. This package provides a range of quantitative cell, nuclei, and vesicle measurements. It can even track cell movements and monitor parameter changes in individual cells over time. Such programs support a broad range of assays and applications, with preconfigured analysis modules and flexible protocol editors. Ultimately, they support rapid decision-making based on complex, multiparametric data.

Five Vs and Big Data

The phenomenon of “big data” (information sets so large and complex that traditional data-processing methods are inadequate to handle them) is changing the world. It is often described using five V-words: value, variety, velocity, veracity, and volume. Challenges in handling such information include analysis, capture, data curation, searching, sharing, storage, transfer, visualization, and privacy/security. The term big data itself usually refers to the advanced methods of extracting value from huge data sets rather than merely to the amount of data. Accurate interpretation of big data can aid decision-making, ultimately improving operational efficiency, reducing costs, and lowering risk in bioprocessing.

Volume refers to the vast amounts of data generated every minute. It is often illustrated by the oft-cited statistic that >90% of the world’s data have been created in just the past few years. Velocity refers to the speed at which new data are generated and moved around. Variety refers to the different forms of data that are collected and used. Veracity refers to the uncertainties associated with inconsistent and incomplete data and the resulting need to organize information. And value is what effective data mining and analytics can make of the massive amounts of data collected.

IBM originally identified four Vs in the early days of big data: volume, velocity, variety, and veracity. More recently, some experts have added further V-words, such as visualization and variability, both of particular interest in bioprocess control. Processed data need to be presented in a readable and accessible form, and variability is inherent in biological systems and thus in the information they generate.

Sources

1 Knilans E. The 5 V’s of Big Data. Avnet Advantage: The Blog. 29 July 2014; https://www.linkedin.com/pulse/2014030607340764875646-big-data-the-5-vs-everyone-mustknow.

2 McNulty E. Understanding Big Data: The Seven V’s. Dataconomy. 22 May 2014; http://dataconomy.com/seven-vs-big-data.

Process Development

The currently predominant strategy in upstream bioprocess development is to perform a large number of experiments in parallel while collecting a large amount of real-time process data. Depending on the culture methods used, a dozen values or more (for such parameters as dissolved oxygen and pH) are obtained, monitored, and adjusted in real time. In modern bioprocess control, more values are being considered as control parameters.

Lean product and process development (Lean PPD) theory and issues of “big data” are influencing the development of bioprocess knowledge as much as in any other field of life science. Therefore, the enabling features of such advances as flash-based solid-state drives (SSDs) and cloud computing are very important to process understanding and control efforts in biopharmaceutical development.

Heightened bioprocess knowledge and establishment of critical process parameters (CPPs) are supported by advanced scale-down models and algorithms as well as by statistics-based analysis and design approaches. The emerging field of analytical QbD encompasses advanced process engineering techniques, new analytical equipment, testing capabilities, and science-based approaches (3, 4). The five classic “Vs” of generated data (see the “Five Vs and Big Data” box) rule out many old, familiar tools and methods. Handling the volume, velocity, variety, and veracity of the data generated, and extracting value from them, requires companies to seek out and use the latest solutions available.

Experimental Design: Analytical and process development engineers generally acknowledge that trial-and-error and single-step iterative testing simply cannot keep up with today’s demands. The biopharmaceutical industry is developing rapidly and is now embracing design of experiments (DoE) and response surface methodology (RSM) as more systematic approaches to process understanding.
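In RSM, a second-order polynomial is typically fitted to the results of a designed experiment and then analyzed to locate an optimum. The sketch below is a minimal, dependency-free illustration under stated assumptions: two hypothetical factors in coded units (x1 for temperature, x2 for pH), a nine-run face-centered design, and responses simulated from an invented "true" surface rather than measured. It fits y = b0 + b1·x1 + b2·x2 + b11·x1² + b22·x2² + b12·x1·x2 by ordinary least squares and then solves for the stationary point, where both partial derivatives are zero.

```python
from typing import List, Sequence, Tuple


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def quad_terms(x1: float, x2: float) -> List[float]:
    """Terms of the full second-order model: 1, x1, x2, x1^2, x2^2, x1*x2."""
    return [1.0, x1, x2, x1 * x1, x2 * x2, x1 * x2]


def fit_response_surface(points: Sequence[Tuple[float, float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares via the normal equations (X^T X) b = X^T y."""
    X = [quad_terms(x1, x2) for x1, x2 in points]
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve_linear(xtx, xty)


def stationary_point(b: Sequence[float]) -> Tuple[float, float]:
    """Solve dy/dx1 = b1 + 2*b11*x1 + b12*x2 = 0 and dy/dx2 = b2 + b12*x1 + 2*b22*x2 = 0."""
    _, b1, b2, b11, b22, b12 = b
    x1, x2 = solve_linear([[2 * b11, b12], [b12, 2 * b22]], [-b1, -b2])
    return x1, x2


# Hypothetical "true" process, used only to simulate responses (coded units).
TRUE_COEFFS = (5.0, 0.4, 0.2, -0.5, -0.4, 0.1)


def true_surface(x1: float, x2: float) -> float:
    return sum(c * t for c, t in zip(TRUE_COEFFS, quad_terms(x1, x2)))


# Nine-run face-centered design (a 3 x 3 grid) in coded units.
design = [(-1, -1), (1, -1), (-1, 1), (1, 1), (-1, 0), (1, 0), (0, -1), (0, 1), (0, 0)]
responses = [true_surface(x1, x2) for x1, x2 in design]

coeffs = fit_response_surface(design, responses)
print("fitted coefficients:", [round(c, 3) for c in coeffs])
print("stationary point (coded):", tuple(round(v, 3) for v in stationary_point(coeffs)))
```

Because b11 and b22 are negative and 4·b11·b22 − b12² > 0 for the simulated surface, this stationary point is a maximum. With real, noisy data, one would also check lack of fit and prediction error before trusting the model's optimum.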

By using a fractional-factorial approach to generate high-quality data from fewer experiments, DoE can help operators evaluate which process inputs have the most significant impact, identify key parameters, and investigate interactions among those parameters. By helping to characterize acceptable ranges of key and critical process parameters, it helps companies build statistical models that identify a “design space” within which their processes can be documented to provide reproducible results. However, DoE cannot efficiently handle the very large numbers of simultaneous factor inputs that some complex systems require, and newer tools are appearing in this arena. Novel offerings range from software packages that provide intuitive user interfaces, improved method editing, and integrated statistical functionality to radical new process development algorithms based on systems theory.
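As an illustration of the fractional-factorial idea, the sketch below builds a 2^(4−1) design: a full two-level factorial in three factors A, B, and C, with the fourth factor D set by the generator D = ABC, so four factors are screened in 8 runs instead of 16. It then estimates each main effect as the mean response at the high level minus the mean at the low level. The factor names and the simulated response are hypothetical, and a real study would use a validated DoE package. Note the aliasing this design accepts (defining relation I = ABCD, resolution IV): each main effect is confounded only with a three-factor interaction.

```python
from itertools import product
from typing import Dict, List

# Hypothetical factor names, in coded units (-1 = low level, +1 = high level).
FACTORS = ["A_temp", "B_pH", "C_feed", "D_DO"]


def fractional_factorial_2_4_1() -> List[Dict[str, int]]:
    """2^(4-1) design: full factorial in A, B, C; generator D = A*B*C (so I = ABCD)."""
    runs = []
    for a, b, c in product((-1, 1), repeat=3):
        runs.append({"A_temp": a, "B_pH": b, "C_feed": c, "D_DO": a * b * c})
    return runs


def main_effects(runs: List[Dict[str, int]], responses: List[float]) -> Dict[str, float]:
    """Main effect = mean response at +1 minus mean response at -1."""
    effects = {}
    for f in FACTORS:
        hi = [y for r, y in zip(runs, responses) if r[f] == 1]
        lo = [y for r, y in zip(runs, responses) if r[f] == -1]
        effects[f] = sum(hi) / len(hi) - sum(lo) / len(lo)
    return effects


def simulated_titre(run: Dict[str, int]) -> float:
    """Invented response: strong pH effect, weaker feed and temperature effects, no D effect."""
    return 3.0 + 0.8 * run["B_pH"] + 0.3 * run["C_feed"] + 0.1 * run["A_temp"]


design = fractional_factorial_2_4_1()
responses = [simulated_titre(r) for r in design]
effects = main_effects(design, responses)
for name, eff in sorted(effects.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {eff:+.2f}")
```

Each estimated effect is twice the corresponding coefficient in the simulated model (the factor moves from −1 to +1), and D correctly shows no effect. With real data, D's estimate would also absorb any ABC interaction it is aliased with.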

Biological scientists and engineers might struggle to understand such complex statistical and experimental approaches. Some commercial solutions can address their needs and help them apply such advanced tools most appropriately and efficiently for their applications. Suppliers are integrating risk-analysis visualization to improve on classical DoE contour and sweet-spot plots, for example. Other features help companies identify where risks for failure can be found in their processes as well as determine the probability of achieving desired results according to proposed criteria. These tools and services can assist in establishment of true QbD and estimation of design space by taking into account uncertainties and variations in parameters, measurement systems, and processes. Furthermore, data-management solutions are becoming available for transforming large amounts of data, batch data, and time-series data into sets of information formatted for easy interpretation to enable decision-making and control actions.
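The idea of estimating the probability of achieving desired results while accounting for parameter variation can be sketched with a simple Monte Carlo simulation: sample the process parameters around a proposed set point with assumed normal variability, push each sample through a response model, add assumed model/measurement error, and count how often the predicted output meets the acceptance criterion. Everything here is hypothetical: the quadratic model, the standard deviations, and the 4.0 g/L specification are illustrative, and commercial tools may use different (for example, Bayesian) methods.

```python
import random
from typing import Tuple


def titre_model(temp: float, ph: float) -> float:
    """Hypothetical fitted response model (g/L), with factors in coded units."""
    return 4.5 + 0.3 * temp + 0.2 * ph - 0.6 * temp ** 2 - 0.5 * ph ** 2


def probability_of_success(
    set_point: Tuple[float, float],
    sd: Tuple[float, float],
    spec_min: float,
    model_sd: float = 0.1,
    n: int = 20_000,
    seed: int = 1,
) -> float:
    """Fraction of simulated runs whose predicted titre meets spec_min."""
    rng = random.Random(seed)  # seeded so results are reproducible
    hits = 0
    for _ in range(n):
        temp = rng.gauss(set_point[0], sd[0])  # parameter variation around the set point
        ph = rng.gauss(set_point[1], sd[1])
        y = titre_model(temp, ph) + rng.gauss(0.0, model_sd)  # model/measurement error
        if y >= spec_min:
            hits += 1
    return hits / n


for sp in [(0.0, 0.0), (0.25, 0.2), (1.0, 1.0)]:
    p = probability_of_success(sp, sd=(0.2, 0.2), spec_min=4.0)
    print(sp, round(p, 3))
```

Mapping this probability over a grid of set points gives a risk-based picture of the design space, in place of a plain contour or sweet-spot plot that ignores variation.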

DoE has no doubt advanced bioprocess development. However, it is not especially efficient for handling very complex or new systems, and it struggles to meet the increasing demand for more efficient process development and design. DoE is performed sequentially using a screening–characterization–optimization (SCO) strategy, which can be time-consuming and can introduce bias because it relies heavily on an analyst’s experience and judgment.

Directional Control Technology (DCT): A new tool has been developed to address those limitations. DCT is based not only on mathematics but also on principles of modern system theory (such as synergetics) and fuzzy logic. Its power in bioprocess development comes from such unique features as simultaneous screening and optimization of the values of selected parameters. DCT applies regardless of a system’s complexity, even to one with nearly 100 parameters, as is the case in Chinese hamster ovary (CHO) media development and optimization. Because it dynamically determines and analyzes each parameter’s contribution to the outputs, DCT reaches a solution with a few expertly designed experiments (usually fewer than 10 experimental conditions).

An example of DCT’s power and efficiency can be found in its recent application to the development of a cell culture process with 67 variables, including process parameters and nutrients (5). With only six experiments, researchers used the DCT approach to increase the yield of a target protein by 267%. In the same project, a DoE approach required a design of 40–50 experiments and produced a final process yield 30% lower than that achieved with the DCT design.
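The comparison can be made explicit with a little arithmetic. Assuming that a 267% increase means the DCT yield was 3.67 times a common baseline yield Y_0, and that "30% lower" is measured relative to the DCT yield (the example does not state either convention explicitly), the two outcomes work out as follows:

```latex
\begin{aligned}
Y_{\mathrm{DCT}} &= (1 + 2.67)\,Y_0 = 3.67\,Y_0,\\
Y_{\mathrm{DoE}} &= (1 - 0.30)\,Y_{\mathrm{DCT}} = 0.70 \times 3.67\,Y_0 \approx 2.57\,Y_0 .
\end{aligned}
```

Under those assumptions, the DoE design would still have raised yield by roughly 157% over baseline, but it needed about 6.7 to 8.3 times as many experiments (40–50 versus 6).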

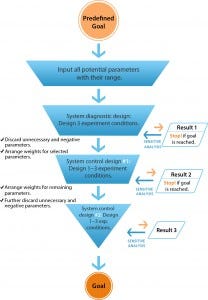

Figure 1: The workflow of directional control technology (DCT)

DoE and other conventional approaches break down complex systems into subgroups of parameters. DCT instead begins by opening up the system: it includes all potential variables related to predefined goals (Figure 1) to establish an initial pool of parameters. Every parameter from that initial pool is included in the first run of experiments, which is designed with three to five different conditions (“system diagnostic designs”). That delivers a much wider field of initial parameters and expands the explored range of the system’s potential behavior. As a result, DCT is much more likely to reveal the optimum configuration of parameters at an earlier stage in process development.

Results from those three to five experiments are used as “feedback” to conduct a sensitivity analysis for each parameter for determining its impact on final system outputs. Only influential and necessary parameters are selected for experimentation, and the weight of their values is based on their respective contribution to process efficiency. So each parameter is examined in the whole system and “judged” only by its performance in that system. The final one or two runs of these system-control experimental conditions are based on results of previous experiments to further organize the parameters and control the system toward achieving predefined objectives (Figure 1).
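DCT's own sensitivity-analysis and weighting rules are not described here in enough detail to reproduce, so the following is only a generic stand-in for the idea of using a handful of diagnostic runs as feedback. It is not the DCT algorithm. Each hypothetical run sets every parameter at once; each parameter's influence is estimated as the least-squares slope of the output against that parameter's coded level; and only parameters whose share of the total influence exceeds an assumed threshold (0.15) are kept, with weights renormalized among them. The parameter names, levels, simulated output, and threshold are all illustrative.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical diagnostic runs: coded level (-1/+1) of every parameter in each of 4 runs.
runs: Dict[str, List[float]] = {
    "glucose": [-1, 1, -1, 1],
    "glutamine": [-1, -1, 1, 1],
    "cysteine": [1, -1, -1, 1],
}


def simulated_output(levels: Dict[str, float]) -> float:
    """Invented system response, for illustration only."""
    return 2.0 + 0.9 * levels["glucose"] + 0.4 * levels["glutamine"] + 0.05 * levels["cysteine"]


n_runs = 4
outputs = [simulated_output({p: runs[p][i] for p in runs}) for i in range(n_runs)]


def slope(x: List[float], y: List[float]) -> float:
    """Least-squares slope of y on x; 0.0 if x never varies."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx if sxx else 0.0


def screen(runs: Dict[str, List[float]], outputs: List[float], threshold: float = 0.15) -> Dict[str, float]:
    """Keep parameters whose share of total |slope| meets the threshold; renormalize weights."""
    influence = {p: abs(slope(levels, outputs)) for p, levels in runs.items()}
    total = sum(influence.values()) or 1.0
    share = {p: v / total for p, v in influence.items()}
    kept = {p: s for p, s in share.items() if s >= threshold}
    kept_total = sum(kept.values()) or 1.0
    return {p: s / kept_total for p, s in kept.items()}


weights = screen(runs, outputs)
print(weights)
```

With so few runs, a parameter whose levels happened to mirror another's would be indistinguishable from it; the sketch sidesteps that by giving the three parameters mutually orthogonal levels, which a real 67-parameter screen could not do in four runs.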

DCT can address multiple outputs, such as quality attributes, within its few experimental designs, as long as those outputs can be quantified. CPPs also can be identified and ranked for further process control.

By significantly reducing the number of experiments needed while providing better and more reliable results than even DoE, DCT could become an efficient new tool for meeting the increasing need for rapid process development, especially with new and very complicated systems. Nevertheless, it addresses only the question of “how” to optimize a system most efficiently, not “why” any individual parameter is effective in doing so (from a biological perspective). Also, as an empirical approach, DCT doesn’t build a mathematical model for prediction. This leaves room for combining the DCT and DoE approaches.

In both process development and control, bioprocess analytics are moving toward near-real-time and fully real-time monitoring. That trend demands increased processing power, interface capabilities, and data filtering and analysis methods. Cloud computing and hosting also are changing the way companies approach component interfacing, communication, and service architecture.
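As one simple example of the data-filtering methods that such monitoring relies on, an exponentially weighted moving average (EWMA) smooths a noisy online probe signal, such as a dissolved-oxygen (DO) reading, before it is displayed or passed to a controller. The smoothing factor alpha and the sample readings below are assumptions for illustration; production systems typically pair such filters with outlier rejection and validated signal-processing settings.

```python
from typing import Iterable, List


def ewma(samples: Iterable[float], alpha: float = 0.2) -> List[float]:
    """s_t = alpha * x_t + (1 - alpha) * s_{t-1}, seeded with the first sample."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed: List[float] = []
    for x in samples:
        smoothed.append(x if not smoothed else alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical DO readings (% air saturation) containing one spurious spike.
do_readings = [40.0, 40.5, 39.8, 55.0, 40.2, 39.9, 40.1]
print([round(v, 2) for v in ewma(do_readings, alpha=0.2)])
```

A smaller alpha suppresses spikes more strongly but makes the filtered signal lag real process changes, so the choice is a trade-off between noise rejection and responsiveness.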

Process Control

For many distinct reasons, bioprocess control systems continue to demand more speed and more storage for large amounts of data. Control algorithms themselves have become more data-hungry and increasingly need more processing power for their mathematical operations. That is the case whether a system operates as a traditional controller based on mathematical models — such as the well-known proportional–integral–derivative (PID) controllers — or involves such newer approaches as “fuzzy expert” systems.
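For readers less familiar with the PID controllers mentioned above, the sketch below implements the textbook discrete (positional) PID law, u_k = Kp·e_k + Ki·Σe·Δt + Kd·(e_k − e_{k−1})/Δt, with output clamping and a simple conditional-integration anti-windup, and closes the loop around a crude first-order model of a DO loop. The gains, sample time, output limits, and plant model are all hypothetical; the demo sets Kd = 0 (effectively PI control). Real bioreactor controllers add derivative filtering and bumpless transfer, and their tuning is process specific. Fuzzy expert systems are not shown.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float
    out_min: float = 0.0
    out_max: float = 100.0
    _integral: float = field(default=0.0, init=False, repr=False)
    _prev_error: Optional[float] = field(default=None, init=False, repr=False)

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / self.dt
        self._prev_error = error
        candidate_integral = self._integral + error * self.dt
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        if self.out_min <= u <= self.out_max:
            self._integral = candidate_integral  # integrate only when unsaturated (anti-windup)
        return min(max(u, self.out_min), self.out_max)  # clamp to actuator limits


def simulate(steps: int = 200, setpoint: float = 40.0) -> List[float]:
    """Close the loop around a hypothetical first-order plant: y' = (gain*u - y) / tau."""
    pid = PID(kp=2.0, ki=0.5, kd=0.0, dt=1.0)
    y, tau, gain = 0.0, 5.0, 1.0
    history: List[float] = []
    for _ in range(steps):
        u = pid.update(setpoint, y)
        y += pid.dt / tau * (gain * u - y)  # explicit Euler step of the plant
        history.append(y)
    return history


trace = simulate()
print(round(trace[-1], 3))
```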

Modern bioprocess control also serves a number of goals beyond the immediate control of a single unit operation. Requirements of modern controllers have expanded to include defense against Stuxnet-type attacks (see the “Stuxnet Worm” box), enhanced human–machine or man–machine interfaces (HMIs or MMIs), and user-interfaced supervisory control and data acquisition (SCADA) programming. Other memory- and processing-hungry aspects include flexibility in application, network capabilities, open (plug-in) interfacing, easy handshaking, and coprocessing.

Stuxnet Worm

The Stuxnet computer worm was designed to attack industrial programmable logic controllers (PLCs). It targets machines running the Microsoft Windows operating system and related networks, then seeks out Siemens Step7 software. In addition to the attacking “worm” payload, the program includes a link file that automatically executes propagated copies of itself and a rootkit component that hides malicious files and processes to prevent detection. Typically introduced to a target environment through an infected USB flash drive, Stuxnet then propagates across that network, scanning for vulnerable PLC software. If none is found, the worm lies dormant on the computer. If it is activated, Stuxnet modifies Step7 code and introduces new commands to the PLC while feeding users a loop of normal-operation readings.

This worm was reported to have compromised Iranian PLCs in 2010, collecting information on their industrial systems and destroying centrifuges involved in nuclear-material refinement. Experts say that Stuxnet’s design and architecture could be tailored to attack modern supervisory control and data acquisition (SCADA) and PLC systems such as those used in bioprocessing. Researchers at Symantec have uncovered a version of the worm, aimed at Iran’s nuclear program, that was already in circulation in November 2007 and had been under development as early as 2005, when the country was just setting up its uranium enrichment facility. They reported that about 59% of infected computers were in Iran, about 18% in Indonesia, over 8% in India, and about 3% in Azerbaijan; every other country accounted for less than 2%.

Many experts have speculated that this was an act of cyberwarfare by Israel, perhaps in collaboration with the United States, but there is no definitive evidence to support that conclusion. Variants and related computer worms have been identified since Stuxnet became publicly known, including the Duqu malware in 2011 and the Flame program in 2012. Clearly this is not an isolated phenomenon, and it should be considered an important trend in cybersecurity.

Reference

1 Stuxnet. Wikipedia. 28 May 2015; http://en.wikipedia.org/wiki/Stuxnet.

To support in-, at-, and on-line analysis, in situ probes and methods for sterile, cell-free sampling are being coupled to an increasing range of analytical systems. These include autosampled biochemistry analyzers, flow-injection analysis, and at-line high-performance and ultraperformance liquid chromatography (HPLC and UPLC). Using them demands device handshaking, data manipulation, and measured-value processing.

In a multiuser environment made up of many systems, networking supports data sharing and collaboration among many users and systems to allow monitoring and control even at a distance. In a manufacturing environment, this usually includes a single familiar interface for all cell culture, chromatography, and membrane/filtration separation operations — both upstream and downstream processing. It should be compatible with all relevant sections of 21 CFR Part 11 (6) and support efficient control of processes, rapid data evaluation, and custom reporting functionalities.
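Part 11 calls for secure, computer-generated, time-stamped audit trails that independently record the date and time of operator entries and actions that create, modify, or delete electronic records. The sketch below shows one way such a trail might be made tamper-evident: each entry stores the SHA-256 hash of the previous entry, so editing or deleting any earlier entry breaks the chain. The hash chaining, the record fields, and the user and record names are assumptions for illustration; Part 11 does not prescribe this technique, and a validated system would also need access control, electronic signatures, and secure storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional


class AuditTrail:
    """Append-only, hash-chained audit log (illustrative only)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    @staticmethod
    def _digest(entry: Dict[str, str]) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user: str, action: str, record_id: str, detail: str,
               timestamp: Optional[str] = None) -> Dict[str, str]:
        entry = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,  # e.g. "create", "modify", "delete"
            "record_id": record_id,
            "detail": detail,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("operator1", "create", "batch-042/pH-setpoint", "set to 7.00")
trail.record("operator2", "modify", "batch-042/pH-setpoint", "7.00 -> 6.95")
print(trail.verify())
trail.entries[0]["detail"] = "set to 7.10"  # simulated after-the-fact tampering
print(trail.verify())
```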

All these advances are being supported by SCADA-based operation of commercial off-the-shelf (COTS) real-time operating systems. Such systems can increasingly provide multiplexed bioreactor control and plant-wide resource monitoring in multiproduct bioprocess facilities (7).

Balancing Security with Functionality

Advances in our capabilities for data gathering and processing have followed developments in microprocessors, algorithm design, and data-handling software. That in turn is leading to significant gains in bioprocess understanding, development, and control. But it has also brought about heightened demands and constraints in intellectual property, systems security, and regulatory compliance.

Those issues are immediately apparent with newer “cloud-based” systems. Meanwhile, the increasing importance of mobile data is presenting further problems even as it enables collaboration and outsourcing (8). And the open-source question has arisen for bioinformatics software (9). Biotechnology is based on life sciences, so companies cannot forget the needs of academic researchers, who provide many of the breakthroughs from which their own products and services arise.

Although the principles of 21 CFR Part 11 remain (6), many industry experts have identified the need for changes, updates, and even further investigation of its directives. Much of their concern can be traced back to consequences of developments in information technologies themselves. A Part 11 Working Group at the FDA is currently working on a revision of the “electronic records; electronic signatures” rule.

Acknowledgment

We are much indebted to William G. Whitford (bioprocess strategic solutions leader at GE Healthcare Life Sciences) for his invaluable help and guidance.

References

1 Patterson JRC. Modern Microprocessors: A 90-Minute Guide. Lighterra: Queensland, Australia, May 2015; www.lighterra.com/papers/modernmicroprocessors.

2 Schmitt S. Information Instead of Data: User-Friendly HMI Concept Increases Process Control Efficiency. BioProcess Int. 13(5) 2015: 42–46.

3 Krishnamurthy R, et al. Emerging Analytical Technologies for Biotherapeutics Development. BioProcess Int. 6(5) 2008: 32–42.

4 Apostol I, Kelner DN. Managing the Analytical Lifecycle for Biotechnology Products. BioProcess Int. 6(8) 2008: 12–19.

5 Fan H. A Novel Method for Fermentation Process Optimization in Complex System. BioProcess International Conference and Exhibition: 26–29 October 2014. IBC Life Sciences: Westborough, MA.

6 Avellanet J. 21 CFR Part 11 Revisited. BioProcess Int. 7(5) 2009: 12–17.

7 Chi B, et al. Multiproduct Facility Design and Control for Biologics. BioProcess Int. 10(11) 2012: S4–S14.

8 Ebook: Biopharma’s Balancing Act: How Firms Are Managing Information Access and Security. Fierce Biotech IT March 2015; www.fiercebiotechit.com/offer/informationand security?source=share.

9 Vihinen M. WorldView: No More Hidden Solutions in Bioinformatics. Nature 521(7552) 2015: 261; www.nature.com/news/no-more-hidden-solutions-in-bioinformatics1.17587?WT.ec_id=NATURE-201505.

Further Reading

Balakrishnan A, Theel K. A Smarter Approach to Biomanufacturing: Achieving Enterprise Manufacturing Intelligence While Optimizing IT Investment. BioProcess Int. 8(5) 2010: 20–24.

Brown F, Hahn M. Informatics Technologies in an Evolving R&D Landscape. BioProcess Int. 10(6) 2012: 64–69.

Jain S. Process Effectiveness Analysis: Toward Enhanced Operational Efficiency, Faster Product Development, and Lower Operating Costs. BioProcess Int. 12(10) 2014: 18–20.

Neway J. Manufacturing Culture: Inspiring Advanced Process Intelligence. BioProcess Int. 11(2) 2013: 18–23.

Quilligan A. Managing Collaboration Across the Extended Organization. BioProcess Int. 12(6) 2014: S18–S23.

Sinclair A, Monge M, Brown A. A Framework for Process Knowledge Management: Reducing Risk and Realizing Value from Development to Manufacturing (Part 1, Conceptual Framework). BioProcess Int. 10(11) 2012: 22–29.

Snee RD. Think Strategically for Design of Experiments Success. BioProcess Int. 9(3) 2011: 18–25.

Tatlock R. Manufacturing Process Automation: Finding a Pathway Forward. BioProcess Int. 10(1) 2012: 12–15.

Helena W. Fan is vice president of business development at Effinity Tech, 17046 Silver Crest Drive, San Diego, CA, 92127; 1-858367-8878; www.effinitytech.com.
